Learn what content decay is, how to detect it with concrete metrics and tools, and follow a step-by-step playbook to revive and prevent lost organic traffic.

Content decay is the sustained drop in organic clicks, rankings, and engagement that quietly erodes pipeline and raises acquisition costs.

If you watch leading indicators in Google Search Console and GA4 and validate technical health with Core Web Vitals and index coverage, you can quickly separate normal fluctuation from structural loss.

You can spot decay by comparing short windows against long-term baselines, then diagnose whether demand fell, rankings slipped, or CTR collapsed due to SERP features. Map causes to focused fixes such as refresh, expansion, consolidation, pruning, or technical repairs, and support updates with internal links, structured data, and re-promotion.

Govern the process with thresholds, alerts, owners, and SLAs, and prove lift with recovered clicks, position deltas, conversions, and ROI versus net-new content. Expect tradeoffs: seasonality, algorithm updates, cannibalization, and indexing issues complicate the picture.

A slight rank loss can cut clicks by more than half: position one averages about 27.6 percent CTR, while position three captures roughly 11 percent. Start by defining a 28-day rolling baseline per URL, setting alert thresholds, and scheduling a monthly review so declines never surprise you.

TL;DR — How to Stop Content Decay

Content decay is the gradual drop in organic traffic, rankings, and engagement on pages that once performed well. It silently kills pipelines and inflates acquisition costs if you don’t detect it early.

👉 Detect it:

- Compare 28-day vs 90-day (and YoY) baselines in Google Search Console and GA4.

- Watch for clicks down ≥ 30%, CTR down ≥ 25%, or positions down ≥ 2 spots on key queries.

- Validate technical health (Core Web Vitals, indexing, internal links).

👉 Diagnose it:

- Demand loss: fewer impressions = topic interest shift.

- Ranking loss: impressions stable + positions down = competitors overtook you.

- CTR loss: position stable + CTR down = SERP features or weak snippets.

- Tech regressions: slower LCP/INP, bad canonicals, broken links.

👉 Fix it:

- Refresh outdated stats, visuals, and CTAs.

- Expand depth with new sections, FAQs, and schema.

- Consolidate overlapping pages with 301s.

- Prune obsolete or zero-value URLs.

- Repair technical debt (speed, indexing, redirects).

- Re-promote improved assets via internal links, outreach, and social.

👉 Measure & prevent:

- Track recovered clicks, CTR, and conversions vs baseline.

- Automate GSC/GA4 alerts for 28-day drops.

- Run monthly scans and quarterly deep audits.

- Govern with clear owners, SLAs, and version control.

Continuous refreshes extend the life of winning content and protect ROI. Even small rank losses can cut clicks in half, so build decay detection and updates into your content operations—not as a rescue, but as routine maintenance.

Table of Contents

- What content decay is and why it matters

- The five lifecycle phases of content (with short examples)

- Common high-level causes of content decay (competitor content, intent shifts, algorithm updates, technical regression)

- Business impact: lost leads, lower revenue, wasted content investment

- When decline is normal vs when it needs action, how to detect content decay, and which metrics indicate it

- How to do a content audit to detect content decay

- 1. Choose primary metrics to watch

- 2. Track defensive quality signals: Core Web Vitals, speed, and indexing

- 3. Set time windows and baselines (90 days vs 12 months, seasonality)

- 4. Define concrete thresholds and rules of thumb for alerts

- 5. Run quick detection in Search Console, GA4, and simple logs

- 6. Use in-browser and CMS signals (extension and CMS search stats)

- 7. Automate alerts: scheduled reports, anomaly detection, and diffs

- 8. Next: diagnose root causes with data and tests

- How to diagnose the root causes of decay

- Interpret metric patterns (CTR down vs impressions down vs position down)

- Competitor analysis: identifying new/better competing pages and SERP feature shifts

- Intent shift detection: query analysis, SERP intent mapping, and search feature changes

- Technical regressions: CWV, indexing, canonical mistakes, duplicate content, and redirect errors

- Internal causes: keyword cannibalisation, poor internal linking, content rot, or outdated facts

- Algorithm updates, manual actions, and their fingerprints

- Decide whether to update, consolidate, prune, or rebuild: a precise mapping from diagnosed cause to repair steps

- A prioritised playbook to fix decaying content

- 1. Triage framework: impact (traffic/conversions) × effort (hours/complexity) to rank pages for action

- 2. Refresh: update facts, stats, multimedia, publication date handling, internal links, and CTAs

- 3. Expand: add depth, related keywords, FAQs/schema, examples, and data where intent broadened

- 4. Consolidate: merge thin/overlapping pages, implement 301s, update backlinks, and internal links to preserve equity

- 5. Prune: when to remove pages (low value, regulatory risk) and safe pruning patterns

- 6. Technical fixes: CWV tuning, canonical/redirect chains, robots/indexing fixes

- 7. Re‑promote and rebuild authority: outreach, internal link boosts, paid social, and link reclamation

- 8. Experiment and validate: content A/B tests, compare CTR and positions over 4–12 weeks

- 9. Choose between update, redirect, or delete using the decision trees and risk checklist

- Measure impact, automate detection, and build governance

- 1. KPIs to report: recovered clicks, impressions, CTR change, position deltas, conversion lift, ROI of refresh vs. new

- 2. How to calculate ROI: estimate traffic-to-lead and lead-to-revenue to compare refresh vs. new

- 3. Audit cadence and SLAs: monthly scans, quarterly deep audits, owners, and ticket SLAs

- 4. Automation and alerts: scheduled GSC exports, GA anomaly detection, Clearscope/Revive integrations, StoryChief/GSC syncs

- 5. Tracking changes: version control, change logs, publish-date policies, and rollback plans

- 6. Prioritisation workflows: scoring model, ticket templates, and stakeholder sign-offs

- 7. Next: long-term prevention tactics and how the content lifecycle ties into broader growth

- How content lifecycle management supports sustainable growth

- Why continuous content maintenance is part of a scalable acquisition strategy

- How content health affects funnel performance, CRO, and paid media efficiency

- Roles and team models: in-house owners, specialist contractors, and agency support models with pros/cons

- Tooling and repeatable processes agencies use to maintain content libraries at scale

- Governance patterns that reduce future decay: taxonomy, editorial calendars, and internal linking standards

- How these lifecycle practices reduce churn in organic performance and improve cross-channel alignment

- Frequently Asked Questions About Content Decay

- What is content decay, and how fast does it usually happen?

- What causes content decay most often: competitors, changes in intent, or technical issues?

- Which metrics best indicate content decay: clicks, impressions, CTR, position, time on page, bounce rate, or conversions?

- What exact numeric thresholds define content decay, for example, X percent drop in clicks or positions over Y months?

- Which tools can help find and monitor content decay, for example, Google Search Console, GA4, Clearscope, StoryChief, and AIOSEO?

- How can I spot content decay directly inside WordPress using AIOSEO’s reports?

- Should I delete, redirect, or update outdated content, and when should I choose each?

- How do I re‑optimize content for new search intent?

- How important are freshness and the publish date for preventing decay?

- How do new or better competitor pages cause decay, and how should I respond?

- How much do internal links and backlinks influence a decaying page?

- How often should I audit my content for decay, and what cadence works at scale?

- Can re‑promoting or building links revive decaying content, and when to invest in links?

- How do algorithm updates and SERP feature changes impact content decay?

- How can Clearscope or similar platforms automatically surface pages experiencing content decay?

- Is content decay a proper label, or is declining user interest a better description?

- What are the five lifecycle phases of content growth, and how do they affect refresh timing?

- How can tools like Revive or StoryChief identify and prioritise decaying content for refresh?

- How do I decide whether to expand, consolidate, or prune a decaying page?

- What signals should trigger re‑promotion of an otherwise complete but under‑performing piece?

- When should I update the publish date after refreshing content?

- What technical issues can cause or worsen content decay, such as site speed, indexing, and broken links?

- How should I consolidate content, set up 301 redirects, and update backlinks and internal links to avoid losing SEO value?

- How do I calculate the ROI of refreshing a page versus creating a new piece of content?

- How can I automate alerts and workflows (owners, SLAs, and tickets) for content decay detection and action?

- How should I treat decaying product pages, pricing pages, or legally regulated pages differently from evergreen blog content?

- What governance, version control, and change‑logging practices should I use when updating content at scale?

- How do I A/B test different refresh strategies to measure which fixes work best?

- What are the SEO risks when changing URLs during consolidation, and what are the best practices to preserve link equity?

- Stop Content Decay and Protect Your Organic Growth

What content decay is and why it matters

What is content decay? Content decay occurs when a page that once performed well gradually loses organic traffic, rankings, and engagement.

It matters because it erodes compounding SEO gains, reduces qualified demand, and, if left unchecked, inflates customer acquisition costs. Not all decline is harmful, since seasonality and time-bound topics decay naturally. The key is distinguishing normal fluctuation from structural loss that requires intervention.

Content decay describes sustained declines in organic clicks, impressions, rankings, or conversions on a once-performing page.

- A working definition: a 20 to 40 percent drop in organic clicks over eight to twelve weeks with no offsetting demand change

- Scope: affects blog posts, landing pages, documentation, and resource hubs

- Measurement: track clicks, impressions, average position, CTR, and conversions

This definition separates one-off dips from a persistent downward trend that signals a competitive or technical issue.

Practitioners also describe content decay with several related terms:

- Declining user interest: demand for the topic shrinks or shifts to adjacent intents

- SERP displacement: competitors or new SERP features absorb clicks

- Post-performance erosion: the asset no longer meets topical depth or freshness

Each term highlights a different driver, but the operational outcome is the same: less performance from your content assets.

Using precise phrasing makes it easier to diagnose the correct fix.

- If demand is steady but rankings fall, prioritize depth, E-E-A-T, and internal links

- If demand falls, consider retargeting the keyword set or updating for new intents

- If CTR drops with stable position, address titles, SERP features, and structured data

Clear language helps teams choose the correct remediation path.

The five lifecycle phases of content (with short examples)

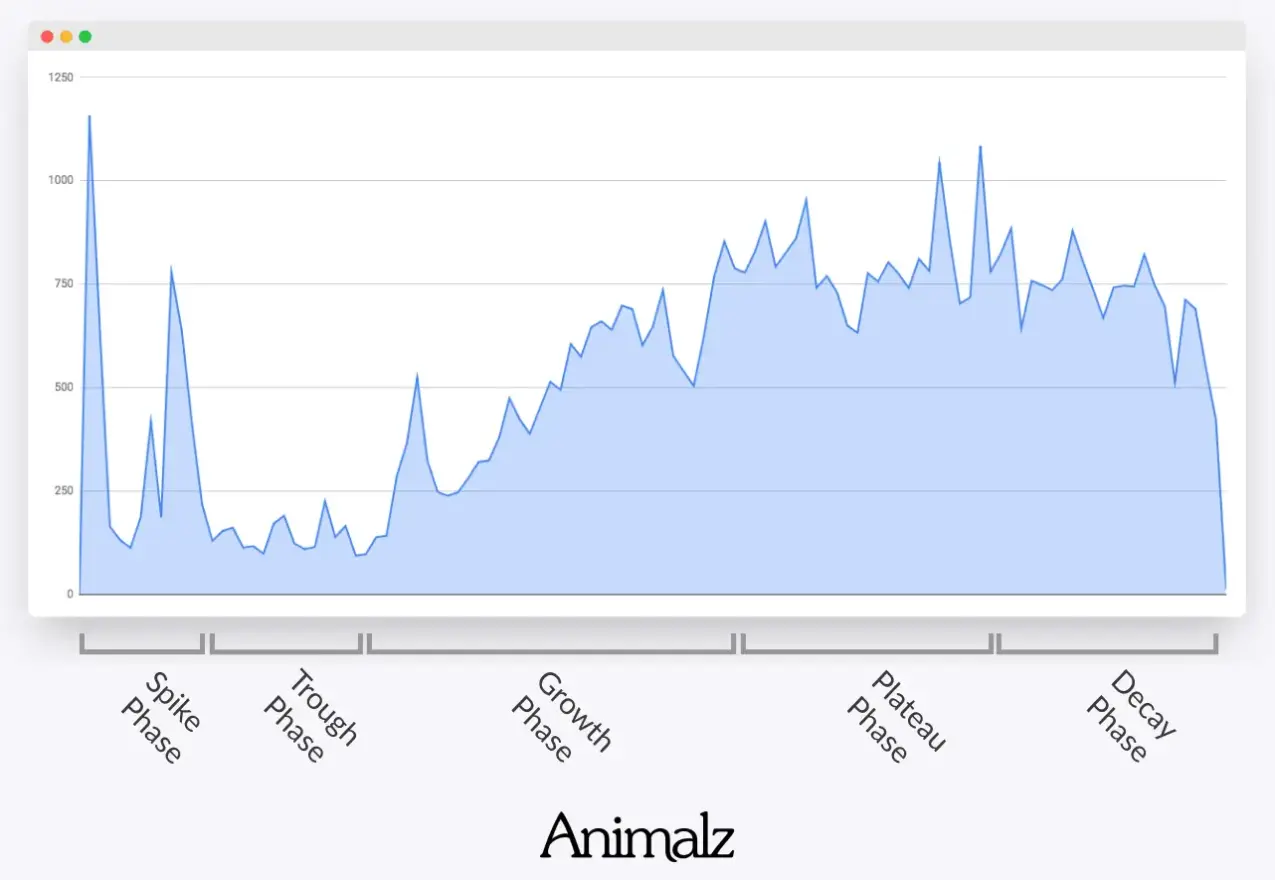

Most content follows five phases from launch to decline.

- Spike: initial traffic burst from distribution and indexing

- Trough: post-launch cool-off as novelty wanes

- Growth: compounding links and query expansion lifts rankings

- Plateau: stable performance with minor variance

- Decay: sustained declines absent external demand shocks

Knowing the phase prevents over-editing stable assets and under-reacting to true decay.

Example scenarios clarify each phase.

- Spike: a launch post triples baseline traffic in forty-eight hours from email and social

- Trough: traffic drops 60 to 80 percent after the first week as shares slow

- Growth: long-tail queries expand, lifting clicks 10 to 20 percent month over month for a quarter

- Plateau: clicks stabilize within plus or minus 10 percent over three months

- Decay: clicks fall 30 percent in two months, while impressions and position slide

Short examples create shared expectations across teams.

Match metrics to phases for faster decisions.

- Spike: high CTR, volatile position, social referrals dominate

- Growth: increasing impressions, improving average position, and new referring domains

- Plateau: steady impressions, flat position, consistent conversions

- Decay: declining impressions and position, falling CTR, fewer new links

Calibrate thresholds by content type and seasonality to avoid false alarms.
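To make the phase boundaries concrete, here is a minimal Python sketch that labels the most recent phase from monthly click totals. It uses the example ranges above as hypothetical defaults: growth of at least 10 percent month over month, a plateau within plus or minus 10 percent, and decay of 30 percent over two months. Spike and trough play out over days, so they are left out. The function name `classify_phase` is illustrative.

```python
from statistics import mean
from typing import Sequence


def classify_phase(monthly_clicks: Sequence[float]) -> str:
    """Label the latest lifecycle phase from monthly organic clicks (oldest first).

    Hypothetical heuristic using this article's example ranges. Spike and
    trough happen within days, so they need daily data and are not covered.
    """
    if len(monthly_clicks) < 3 or min(monthly_clicks[-3:]) <= 0:
        return "insufficient data"
    m2, m1, m0 = monthly_clicks[-3:]  # m0 is the most recent month

    # Decay: clicks down 30 percent or more across the last two months.
    if (m0 - m2) / m2 <= -0.30:
        return "decay"
    # Growth: at least +10 percent month over month, two months running.
    if (m1 - m2) / m2 >= 0.10 and (m0 - m1) / m1 >= 0.10:
        return "growth"
    # Plateau: every month within +/-10 percent of the three-month mean.
    avg = mean((m2, m1, m0))
    if all(abs(m - avg) / avg <= 0.10 for m in (m2, m1, m0)):
        return "plateau"
    return "review"  # mixed signals: inspect manually
```

For example, `classify_phase([1000, 700, 650])` returns `"decay"` because clicks fell 35 percent across two months.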

Common high-level causes of content decay (competitor content, intent shifts, algorithm updates, technical regression)

Competitors publish fresher, deeper, or more authoritative content that realigns with the dominant intent. Their coverage often adds missing subtopics, better examples, updated data, and stronger internal links, thereby narrowing topical gaps and earning links.

An effective counter includes closing depth gaps, refreshing statistics, and tightening internal anchor distribution to the target page. This approach is outlined in the content refresh frameworks discussed in Animalz’s article on content refreshing.

Intent shifts change what searchers expect to see, and algorithms reward pages that align with that shift. Transactional results may replace informational ones, or SERP features like product carousels, short videos, or AI overviews can absorb clicks even when position remains stable.

Monitoring ranking updates on Google’s Search Status dashboard helps correlate performance drops with systemic changes rather than local issues.

Technical regression undermines otherwise solid content by degrading load speed, stability, and crawlability. Google’s research found that as page load time goes from 1 to 3 seconds, the probability of a bounce increases by 32 percent, which reduces engagement with the page. Core Web Vitals regressions, noindex errors, template changes, or broken internal links can depress impressions and clicks independent of content quality.

Diagnosing causes benefits from layered evidence across search, page, and site signals. Compare your page’s coverage to the top-ranking pages and check whether missing sections map to People Also Ask or new SERP modules. Align content to the refreshed intent, then restore technical health so improvements can be crawled and evaluated quickly.

Link acquisition patterns also indicate shifting authority in the competitive set. If rivals gain high-authority links tied to the topic while your page’s link velocity stalls, expect displacement even with similar on-page quality. Rebuilding authority through refreshed assets, digital PR hooks, and internal link consolidation prevents further slippage.

Finally, changes in how Google renders results can reallocate clicks without altering average position. A stable position with a falling CTR usually indicates new SERP features or weaker titles and meta descriptions rather than loss of relevance. Addressing snippet competitiveness and adding structured data can restore click share despite unchanged rankings.

Business impact: lost leads, lower revenue, wasted content investment

Rank slippage has an outsized effect on clicks and pipeline.

- Backlinko’s analysis of 11.8 million Google searches reported a 27.6 percent average CTR for position one vs roughly 11 percent for position three

- Moving from position one to three can cut clicks by about 60 percent, compressing lead volume proportionally

- Fewer top-of-funnel visits shrink remarketing pools and email list growth

Even small ranking drops compound across quarters and reduce revenue predictability.
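You can check this arithmetic yourself. The sketch below uses only the two CTR figures cited above. Real CTR curves vary by query type and SERP layout, so treat the dictionary as a placeholder for CTRs observed in your own Search Console data.

```python
# Average CTR by position, from the Backlinko figures cited above.
# Replace with CTRs observed in your own Search Console data.
CTR_BY_POSITION = {1: 0.276, 3: 0.11}


def expected_clicks(impressions: int, position: int) -> float:
    """Estimate clicks as impressions multiplied by the CTR for a position."""
    return impressions * CTR_BY_POSITION[position]


def click_loss_pct(from_position: int, to_position: int) -> float:
    """Percentage of clicks lost when a page slips between two positions."""
    before = CTR_BY_POSITION[from_position]
    after = CTR_BY_POSITION[to_position]
    return (before - after) / before * 100


monthly_impressions = 10_000
print(round(expected_clicks(monthly_impressions, 1)))  # 2760
print(round(expected_clicks(monthly_impressions, 3)))  # 1100
print(round(click_loss_pct(1, 3), 1))  # 60.1
```

With 10,000 monthly impressions, that is roughly 2,760 clicks at position one versus 1,100 at position three. If conversion rates hold, leads fall by the same proportion.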

Content decay amplifies acquisition costs and wastes sunk content spend.

- Ahrefs found 96.55 percent of content gets no Google traffic, underscoring how weak performance burns resources

- HubSpot documented a 106 percent increase in organic traffic by updating and re-promoting historical posts

- Refreshing winners is cheaper than net-new creation and recovers existing link equity

Redirecting budget toward refreshing the top decay candidates can improve ROI within one to two sprints.

Organic losses force greater reliance on paid channels.

- SparkToro’s 2024 analysis estimated that 58.5 percent of U.S. Google searches end without a click, so paid patches do not always recover lost organic demand

- Rising CPCs magnify the margin hit when organic capture erodes

- Branded search leakage worsens when competitors occupy SERP features around your queries

Protecting durable organic coverage reduces volatility in blended CAC.

When decline is normal vs when it needs action, how to detect content decay, and which metrics indicate it

Some declines are expected and need no fix.

- Seasonal content should be judged on year-over-year windows, not month-over-month

- Time-bound posts, such as news and launches, naturally decay after the event’s half-life

- Product sunset or policy-change pages may be archived rather than refreshed

Use context to avoid over-optimizing assets that did their job.

Act when leading indicators confirm structural decay.

- Three consecutive 28-day periods, each with a click decline of at least 20 percent and a position drop of two or more

- Falling impressions with a stable position signal a demand shift, while stable impressions with a worse position signal competitive loss

- A stable position with a CTR drop indicates SERP feature crowding or weak snippets

Thresholds guide triage and keep teams focused on material risk.
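One way to encode the first rule is sketched below in Python. The rule does not say which baseline to compare against, so this sketch assumes each of the last three 28-day periods is compared with a single pre-decline baseline period. It also assumes a higher average-position number means a lower ranking. The `Period` type and `is_structural_decay` function are hypothetical names.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Period:
    """Totals for one 28-day period (hypothetical structure)."""
    clicks: int
    avg_position: float  # larger number = lower ranking


def is_structural_decay(
    baseline: Period,
    recent: Sequence[Period],
    periods: int = 3,
    min_click_drop: float = 0.20,
    min_position_drop: float = 2.0,
) -> bool:
    """True if each of the last `periods` windows shows both a click decline of
    at least `min_click_drop` and a position loss of at least
    `min_position_drop` versus the baseline window."""
    if len(recent) < periods or baseline.clicks <= 0:
        return False
    for p in recent[-periods:]:
        click_drop = (baseline.clicks - p.clicks) / baseline.clicks
        position_drop = p.avg_position - baseline.avg_position
        if click_drop < min_click_drop or position_drop < min_position_drop:
            return False
    return True


baseline = Period(clicks=1000, avg_position=4.0)
recent = [Period(780, 6.1), Period(700, 6.5), Period(650, 7.0)]
print(is_structural_decay(baseline, recent))  # True
```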

Use a simple detection workflow and schedule refreshes.

- Start in Search Console: compare clicks, impressions, position, and CTR over the last 3, 6, and 12 months by page and query

- Validate in GA4: landing page organic sessions, engaged sessions, and conversions, segmented by device

- Inspect the live SERP for features and competitor depth, log deltas, and map updates into a content calendar

A lightweight cadence of monthly checks and quarterly refresh windows keeps decay from compounding while minimizing thrash. It also gives you a repeatable monitoring routine for spotting issues quickly.

How to do a content audit to detect content decay

How do you detect content decay on a weekly or monthly cadence? Set up a recurring review that tracks search and engagement metrics, checks technical quality signals, and compares short windows against long-term baselines.

Add clear thresholds and automated alerts so drops surface fast, then confirm in Search Console, Analytics, and simple log views. Close each review by queuing diagnosis work. Always adjust for seasonality and low-sample volatility before acting.

1. Choose primary metrics to watch

Content decay is a sustained decline in visibility or engagement relative to past performance. Track search-side demand and ranking exposure, then confirm on-site behavior and outcomes. Use one page-level sheet per URL to make trends easy to scan.

Clicks, impressions, click-through rate, and average position show search demand and competitiveness. Sessions, average engagement time, engagement rate, and conversions validate impact after the click. Use consistent attribution models so week-to-week comparisons remain valid.

In Google Search Console, the Performance report defines clicks, impressions, CTR, and position precisely, preventing misinterpretations caused by third-party definitions. Review the official metric definitions in the Search Console documentation to ensure consistency across teams, as differences in aggregation and filtering can affect outcomes. The Search Console metrics glossary clarifies how to filter by page, query, or country in the Performance report.

- Maintain one metric table per URL with columns for clicks, impressions, CTR, position, sessions, engagement rate, average engagement time, and conversions

- Use the same date granularity for all metrics, daily or weekly, to avoid false variance

- Split conversions into primary and assisted to catch shifts in user journeys

- Record publication date and last updated date to explain natural lifecycle effects

These habits reduce noise and help you spot real decay rather than normal variance.
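A per-URL table like this can live in a spreadsheet. A typed record, however, makes the columns explicit and keeps CTR derived rather than entered by hand. The sketch below is a hypothetical schema with field names chosen for illustration. It writes weekly rows to CSV using only the standard library.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class UrlWeek:
    """One weekly row of the per-URL metric table (hypothetical schema)."""
    url: str
    week_start: str  # ISO date of the week's first day
    clicks: int
    impressions: int
    position: float
    sessions: int
    engagement_rate: float
    avg_engagement_time_s: float
    primary_conversions: int
    assisted_conversions: int
    published: str
    last_updated: str

    @property
    def ctr(self) -> float:
        # Derived from clicks and impressions so it never drifts out of sync.
        return self.clicks / self.impressions if self.impressions else 0.0


def to_csv(rows: list) -> str:
    """Serialize rows to CSV, appending the derived CTR as the last column."""
    buf = io.StringIO()
    names = [f.name for f in fields(UrlWeek)] + ["ctr"]
    writer = csv.DictWriter(buf, fieldnames=names)
    writer.writeheader()
    for row in rows:
        writer.writerow({**asdict(row), "ctr": round(row.ctr, 4)})
    return buf.getvalue()
```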

2. Track defensive quality signals: Core Web Vitals, speed, and indexing

Quality regressions often precede traffic loss. Monitor Core Web Vitals, page speed, and indexing status as early-warning signals. Review both field data and lab diagnostics.

Core Web Vitals thresholds define pass or fail at the 75th percentile of page loads: LCP at or under 2.5s, INP at or under 200ms, and CLS at or under 0.1. Field data from Chrome UX best reflects real users, while lab tests help isolate causes. Google’s guidance on thresholds and the 75th percentile is published on web.dev.
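The 75th-percentile rule is easy to misread as an average. This sketch applies the thresholds above to raw field samples using a nearest-rank percentile. CrUX itself computes percentiles from aggregated histograms, so expect small differences. LCP and INP samples are in milliseconds and CLS is unitless. The function names are illustrative.

```python
import math
from typing import Dict, Sequence

# "Good" upper bounds from the thresholds above: LCP and INP in ms, CLS unitless.
GOOD_THRESHOLDS = {"LCP": 2500.0, "INP": 200.0, "CLS": 0.1}


def p75(samples: Sequence[float]) -> float:
    """Nearest-rank 75th percentile (an approximation of CrUX's method)."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]


def assess(field_samples: Dict[str, Sequence[float]]) -> Dict[str, bool]:
    """Return pass (True) or fail (False) per metric at the 75th percentile."""
    return {metric: p75(values) <= GOOD_THRESHOLDS[metric]
            for metric, values in field_samples.items()}


print(assess({
    "LCP": [1800, 2100, 2400, 2600, 3100, 2000, 2200, 2300],
    "INP": [120, 180, 250, 90],
    "CLS": [0.05, 0.2, 0.15, 0.02],
}))  # {'LCP': True, 'INP': True, 'CLS': False}
```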

Indexing status changes can hide a page from search or reduce eligibility for rich results. Check for sudden switches between Indexed and Crawled but not indexed, soft 404s, canonicalization mismatches, and noindex. Confirm that XML sitemaps include the page and match canonical URLs.

Server and network metrics also signal impending decay. Watch for 5xx spikes, timeouts, TLS errors, and increased TTFB. The Search Console Crawl stats report reveals fetch errors, response sizes, and latency trends that correlate with traffic drops.

- Track Core Web Vitals pass rates by device and country to catch regional regressions

- Monitor average LCP and INP trendlines next to code deploys to connect causes

- Validate index coverage status and canonical target weekly for key URLs

- Ensure sitemaps refresh after URL or canonical changes

Confirming these items weekly prevents slow performance drift from turning into traffic loss.
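If you have raw server access logs, a short script can surface the 5xx and 404 spikes mentioned above before they show up in Crawl stats. This sketch assumes the Apache/Nginx "combined" log format. It flags days where an error class exceeds a chosen share of requests. The 2 percent default is an arbitrary starting point, not a recommended standard.

```python
import re
from collections import Counter, defaultdict
from typing import Dict, Iterable, List

# Matches the date and status code in a combined-log-format line, e.g.
# 1.2.3.4 - - [10/Oct/2024:13:55:36 +0000] "GET /guide HTTP/1.1" 503 0 "-" "UA"
LOG_RE = re.compile(r'\[(?P<day>[^:\]]+):[^\]]*\] "[^"]*" (?P<status>\d{3}) ')


def error_counts_by_day(lines: Iterable[str]) -> Dict[str, Counter]:
    """Count total requests, 404s, and 5xx responses per log day."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for line in lines:
        match = LOG_RE.search(line)
        if not match:
            continue  # skip lines in other formats
        day, status = match.group("day"), int(match.group("status"))
        counts[day]["total"] += 1
        if status == 404:
            counts[day]["404"] += 1
        elif 500 <= status <= 599:
            counts[day]["5xx"] += 1
    return counts


def flag_days(counts: Dict[str, Counter], kind: str = "5xx", max_share: float = 0.02) -> List[str]:
    """Days where `kind` responses exceed `max_share` of requests (sorted as strings)."""
    return sorted(day for day, c in counts.items()
                  if c["total"] and c[kind] / c["total"] > max_share)
```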

3. Set time windows and baselines (90 days vs 12 months, seasonality)

You need both short-term and long-term views. Use a short window for detection and a long window for validation. Compare year over year when seasonality is strong.

A practical pattern is the last 28 days vs the previous 28 days for detection and the last 90 days vs the same period last year for validation. For fast-moving topics, add last 7 days vs prior 7 days with cautious thresholds. Use the same weekday alignment to avoid weekend skews.

Define a baseline as a rolling median, not a simple average, to reduce outlier impact. Keep a 12‑month baseline for evergreen pages and a 90‑day baseline for newsy posts. Google Analytics supports date range comparisons that preserve dimension filters and segments in reports, as documented in the GA4 help.

- Short window: 7 or 14 days to catch fresh drops quickly

- Primary window: 28 days for stable alerting with enough data

- Validation window: 90 days and 12 months to confirm seasonality effects

- Year-over-year comparison: same weekdays and holidays to align demand patterns

Long-window context distinguishes content decay from post-hype normalization and keeps responses proportional, so effort stays focused on real losses.
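The windowing logic above fits in a few lines of Python. Because 28 is a multiple of 7, each window contains the same mix of weekdays, which gives you the weekday alignment described earlier. In this sketch, the baseline is the median of the twelve previous 28-day totals (about 48 weeks). The input is assumed to be a mapping of date to daily clicks, for example built from a Search Console export.

```python
from datetime import date, timedelta
from statistics import median
from typing import Dict, Tuple


def window_total(daily: Dict[date, int], end: date, days: int) -> int:
    """Sum daily clicks for the `days` days ending on `end` (inclusive)."""
    return sum(daily.get(end - timedelta(days=d), 0) for d in range(days))


def compare_windows(daily: Dict[date, int], end: date, days: int = 28) -> Tuple[int, int, float]:
    """Current window vs the immediately preceding window of equal length."""
    current = window_total(daily, end, days)
    previous = window_total(daily, end - timedelta(days=days), days)
    change = (current - previous) / previous if previous else float("nan")
    return current, previous, change


def rolling_median_baseline(daily: Dict[date, int], end: date, days: int = 28, periods: int = 12) -> float:
    """Median of the `periods` window totals before the current window."""
    totals = [window_total(daily, end - timedelta(days=days * k), days)
              for k in range(1, periods + 1)]
    return median(totals)
```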

4. Define concrete thresholds and rules of thumb for alerts

Alert rules must balance sensitivity and noise. Set different thresholds for high- and low-traffic pages. Use both percentage and absolute cutoffs.

For discovery, trigger an alert when clicks fall 30 percent or more from the 28‑day baseline for two consecutive weeks. Flag a CTR drop of at least 25 percent with a position change of less than 0.5 to diagnose snippet or SERP-feature shifts. Alert on an average-position drop of two or more for queries with at least 100 impressions in the last 28 days.

For engagement, trigger when average engagement time per session falls 20 percent or more with stable traffic, which often signals content relevance drift.

For conversions, alert on a 25 percent or more drop in conversion rate with stable sessions, which points to offer or UX changes. The official Search Console definitions for position and impressions help interpret these rules in the Performance report reference.

- Evergreen guides: clicks at least 30 percent down vs 28‑day baseline and position down by 2 or more on top 10 queries

- Product or service pages: conversion rate down by 25 percent or more with sessions stable plus or minus 10 percent

- News or trend posts: impressions down by 40 percent and CTR down by 20 percent due to SERP novelty loss

- Low-volume pages: use absolute thresholds, such as a drop of 200 clicks per week, to avoid percentage noise

Use minimum sample sizes, for example 200 impressions or 100 sessions per window, to avoid false positives.
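The rules above can be combined into a single evaluator. This minimal sketch makes several assumptions:

- Both windows are 28-day totals.
- Recent weekly clicks are compared with one quarter of the 28-day baseline.
- "Stable" sessions means within plus or minus 10 percent.
- The per-query 100-impression floor is simplified to the page-level minimum of 200 impressions.

The `Window` type and alert labels are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Window:
    """28-day totals for one URL (hypothetical structure)."""
    clicks: float
    impressions: int
    position: float  # larger number = lower ranking
    sessions: int
    avg_engagement_s: float
    conversions: int

    @property
    def ctr(self) -> float:
        return self.clicks / self.impressions if self.impressions else 0.0

    @property
    def cvr(self) -> float:
        return self.conversions / self.sessions if self.sessions else 0.0


def pct_change(new: float, old: float) -> float:
    return (new - old) / old if old else 0.0


def evaluate_alerts(baseline: Window, current: Window, recent_weekly_clicks: Sequence[float]) -> List[str]:
    alerts: List[str] = []
    # Minimum samples: skip rule groups whose windows are too small to trust.
    search_ok = min(baseline.impressions, current.impressions) >= 200
    site_ok = min(baseline.sessions, current.sessions) >= 100

    if search_ok:
        weekly_base = baseline.clicks / 4  # 28-day baseline expressed per week
        last_two = list(recent_weekly_clicks)[-2:]
        if len(last_two) == 2 and all(pct_change(w, weekly_base) <= -0.30 for w in last_two):
            alerts.append("clicks")
        position_delta = current.position - baseline.position
        if pct_change(current.ctr, baseline.ctr) <= -0.25 and abs(position_delta) < 0.5:
            alerts.append("ctr_serp")
        if position_delta >= 2:
            alerts.append("position")

    if site_ok:
        sessions_stable = abs(pct_change(current.sessions, baseline.sessions)) <= 0.10
        if sessions_stable and pct_change(current.avg_engagement_s, baseline.avg_engagement_s) <= -0.20:
            alerts.append("engagement")
        if sessions_stable and pct_change(current.cvr, baseline.cvr) <= -0.25:
            alerts.append("conversion")
    return alerts
```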

Variance increases when samples are small. For low-volume pages, switch to absolute drops and extend the window to 56 days. A simple control-chart approach around the rolling median reduces spurious alerts, as explained in time-series monitoring primers like Evan Miller’s notes on statistical pitfalls.
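A control chart does not need special tooling. The sketch below centers the limits on the median and measures spread with the median absolute deviation (MAD). This robust variant is used here because it resists outliers. It is one reasonable approach, not necessarily the method described in the primers mentioned above. Only the lower limit is checked, since the goal is to catch drops.

```python
from statistics import median
from typing import List, Sequence, Tuple


def robust_limits(history: Sequence[float], k: float = 3.0) -> Tuple[float, float, float]:
    """Control limits centered on the median, with MAD as the spread estimate."""
    center = median(history)
    mad = median(abs(x - center) for x in history)
    spread = 1.4826 * mad  # scales MAD to approximate a standard deviation for normal data
    return center - k * spread, center, center + k * spread


def below_lower_limit(history: Sequence[float], recent: Sequence[float], k: float = 3.0) -> List[int]:
    """Indexes of recent observations that fall below the lower control limit."""
    lower, _, _ = robust_limits(history, k)
    return [i for i, value in enumerate(recent) if value < lower]


weekly_clicks = [100, 104, 98, 101, 97, 103, 99, 102]
print(below_lower_limit(weekly_clicks, [99, 90, 85]))  # [1, 2]
```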

5. Run quick detection in Search Console, GA4, and simple logs

Start with Search Console. Open the Search Results report, filter by Page, and compare the last 28 days vs the previous period. Drill into Queries, sort by impressions, and identify clusters where position fell but impressions stayed flat.

Use GA4 to validate user behavior changes after the click. In Reports, under Engagement, Pages and screens, compare the last 28 days vs the previous period and review sessions, views, average engagement time, engagement rate, and conversions per URL. Segment by device and country to isolate changes that the aggregate view hides.

Check crawl and server signals with light log reviews or built-in reports. The Search Console Crawl stats report shows fetch counts, host status, and error types. Scan server logs or CDN logs for spikes in 404s, 5xx, or unusual user-agent patterns that align with traffic drops.

- In Search Console, add query filters for brand vs non-brand to prevent false decay on branded swings

- In GA4, annotate deploys and content updates to align changes with metrics

- In logs, check response times and status codes during detected decline windows

- For JavaScript-heavy pages, verify that HTML contains critical content for crawlers

These checks confirm whether decay is demand-side, ranking-side, or site-side.
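Once you export query-level data for two periods, you can script the Search Console step of finding queries where position fell while impressions held steady. This sketch makes three assumptions:

- Each period is a mapping from query to its impressions and average position.
- A hypothetical brand regex excludes branded queries.
- Impressions within plus or minus 10 percent count as flat.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical brand pattern: replace "acme" with your own brand terms.
BRAND_RE = re.compile(r"\bacme\b", re.IGNORECASE)


def ranking_side_losses(
    prev: Dict[str, Dict[str, float]],
    curr: Dict[str, Dict[str, float]],
    min_position_drop: float = 2.0,
    flat_band: float = 0.10,
    min_impressions: int = 100,
) -> List[Tuple[str, float]]:
    """Non-brand queries whose position worsened while impressions stayed flat."""
    losses = []
    for query, now in curr.items():
        before = prev.get(query)
        if before is None or BRAND_RE.search(query):
            continue
        if before["impressions"] < min_impressions:
            continue  # too little data to trust the comparison
        impression_change = (now["impressions"] - before["impressions"]) / before["impressions"]
        position_drop = now["position"] - before["position"]  # positive = lower ranking
        if abs(impression_change) <= flat_band and position_drop >= min_position_drop:
            losses.append((query, round(position_drop, 1)))
    return sorted(losses, key=lambda item: item[1], reverse=True)
```

The output lists the worst position drops first, which makes a natural queue for the diagnosis step.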

6. Use in-browser and CMS signals (extension and CMS search stats)

A quick in-browser scan catches on-page regressions. A browser extension that surfaces titles, descriptions, headings, canonicals, robots directives, and Core Web Vitals field data via CrUX can reveal obvious issues fast. Tools like our Sprout SEO browser extension offer this page-level view without leaving the browser.

CMS-level search stats expose local drops before they appear in external dashboards. A plugin such as AIOSEO Search Statistics shows query-level impressions and positions inside the CMS, tying performance to drafts, updates, and publication times. Pair this with a visible last updated field to track freshness alongside performance.

For deeper quality checks, review structured data, Open Graph and Twitter tags, and internal link paths. Validate schema against Google’s documentation and test rich result eligibility to avoid SERP real-estate loss. Our Core Web Vitals guide provides thresholds and remediation steps that help maintain page quality over time.

- Confirm canonical and hreflang consistency to avoid duplicate indexing splits

- Reassess title and description against top queries that actually drive impressions

- Check render-blocking scripts and large hero images that can inflate LCP

- Ensure internal links from high-traffic pages still point to the target URL

These quick scans often surface fixes that reverse minor decay without large rebuilds.
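For a scriptable version of the same quick scan, Python's standard-library `html.parser` is enough. It can pull the title, meta description, robots directive, canonical, and H1 count from fetched HTML. This is a minimal sketch with two limitations. It reads raw HTML only, so it misses tags injected by JavaScript. The one-H1 check is a house-style assumption, not a Google requirement.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional


class HeadAudit(HTMLParser):
    """Collects the on-page elements a quick decay scan usually checks."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.meta: Dict[str, str] = {}
        self.canonical: Optional[str] = None
        self.h1_count = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attr = {k: (v or "") for k, v in attrs}  # valueless attributes become ""
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and attr.get("name"):
            self.meta[attr["name"].lower()] = attr.get("content", "")
        elif tag == "link" and "canonical" in attr.get("rel", "").lower().split():
            self.canonical = attr.get("href")
        elif tag == "h1":
            self.h1_count += 1

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit(html: str, expected_canonical: str) -> List[str]:
    """Return human-readable issues found in the page's raw HTML."""
    parser = HeadAudit()
    parser.feed(html)
    issues = []
    if not parser.title.strip():
        issues.append("missing title")
    if not parser.meta.get("description"):
        issues.append("missing meta description")
    if "noindex" in parser.meta.get("robots", "").lower():
        issues.append("noindex")
    if parser.canonical != expected_canonical:
        issues.append(f"canonical points to {parser.canonical}")
    if parser.h1_count != 1:
        issues.append(f"{parser.h1_count} h1 tags")
    return issues
```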

7. Automate alerts: scheduled reports, anomaly detection, and diffs

Automate export and delivery so you see issues without manual checks. Use the Search Console API or Bulk Data Export to BigQuery and schedule Looker Studio emails for weekly change tables by URL. The official Bulk Data Export and API documentation describes the schemas and quotas.

Leverage GA4’s custom insights with anomaly detection to watch key URLs. Configure conditions like sessions down 30 percent week over week, with conversions down 20 percent, and route alerts to Slack or email. Store daily CSVs for Search Console and GA4 extracts so a simple diff highlights winners and losers.

Create a small monitoring script or notebook that applies your thresholds and annotates deploys. Add a URL owner field and route alerts to the responsible editor or developer. Over time, track the mean time to detect and the mean time to resolve to measure process effectiveness.

- Weekly: email digest with top 20 declining URLs and their primary cause tag

- Daily: anomaly alerts on high-impact URLs only to reduce noise

- Monthly: year-over-year validation report to separate decay from seasonality

- Quarterly: threshold review to tune sensitivity as content mix evolves

Regular cadence and ownership keep the system actionable.
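The daily CSV diff and owner routing can start very small. This sketch makes three assumptions:

- Each snapshot is a CSV with `url` and `clicks` columns.
- A URL missing from the newer file counts as zero clicks.
- Drops of 30 percent or more route to an owner from a hypothetical URL-to-owner mapping.

It reports only declines.

```python
import csv
import io
from collections import defaultdict
from typing import Dict, List, Tuple


def load_snapshot(csv_text: str) -> Dict[str, int]:
    """Map URL -> clicks from a daily CSV export with `url` and `clicks` columns."""
    return {row["url"]: int(row["clicks"]) for row in csv.DictReader(io.StringIO(csv_text))}


def route_declines(
    old_csv: str, new_csv: str, owners: Dict[str, str], drop_pct: float = 0.30
) -> Dict[str, List[Tuple[str, int, int]]]:
    """Group URLs whose clicks fell by at least `drop_pct` under their owner."""
    old, new = load_snapshot(old_csv), load_snapshot(new_csv)
    routed: Dict[str, List[Tuple[str, int, int]]] = defaultdict(list)
    for url, before in old.items():
        after = new.get(url, 0)  # a URL missing from the new export counts as zero
        if before and (before - after) / before >= drop_pct:
            routed[owners.get(url, "unassigned")].append((url, before, after))
    return dict(routed)
```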

8. Next: diagnose root causes with data and tests

Move from detection to diagnosis with structured questions. Did demand fall, did ranking slip, or did engagement collapse? Classify pages by suspected cause before proposing fixes.

For ranking-side decay, analyze top queries and SERP features that displaced clicks, such as video, AI overviews, or People Also Ask. For engagement-side decay, review intent alignment, title and meta mismatch, and content depth. Test new intros, updated data, and improved internal links. Google’s documentation on rich results and SERP features helps interpret visibility shifts in the Search Gallery.

Turn fixes into small tests and measure outcomes against baselines. Use pre- and post-windows with matched weekdays and a holdout when possible. For larger sites, apply causal inference tools to estimate lift. The CausalImpact framework from Google showcases time-series impact estimation for interventions in the CausalImpact paper and package.

- Demand loss: expand coverage for rising related queries and refresh statistics with current year data

- Ranking loss: improve E-E-A-T signals, update headers, add missing entities, and strengthen internal links

- Engagement loss: tighten intros, clarify offers, simplify layouts, and reduce LCP and INP to speed interactions

Document hypotheses, changes, and results so you can replicate successful fixes at scale, which makes it easier to choose the right repair without guesswork.
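If you lack the tooling for CausalImpact, a simpler difference-in-differences comparison is a reasonable first check. Note that this is a different and much cruder technique than CausalImpact's Bayesian structural time-series model. The sketch compares the post/pre ratio for refreshed pages with the same ratio for holdout pages. It requires equal, whole-week windows of daily clicks so the weekday mix matches.

```python
from statistics import mean
from typing import Sequence


def did_lift(
    treated_pre: Sequence[float],
    treated_post: Sequence[float],
    control_pre: Sequence[float],
    control_post: Sequence[float],
) -> float:
    """Relative lift of refreshed pages beyond the change seen in holdout pages.

    Inputs are daily clicks. Windows must be equal and whole weeks so the
    weekday mix matches across pre and post periods.
    """
    windows = (treated_pre, treated_post, control_pre, control_post)
    n = len(treated_pre)
    if n == 0 or n % 7 or any(len(w) != n for w in windows):
        raise ValueError("use equal-length, whole-week windows")
    treated_ratio = mean(treated_post) / mean(treated_pre)
    control_ratio = mean(control_post) / mean(control_pre)
    return treated_ratio / control_ratio - 1


# Refreshed pages rose 30 percent while the holdout rose 10 percent.
lift = did_lift([100] * 28, [130] * 28, [200] * 28, [220] * 28)
print(round(lift, 3))  # 0.182
```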

How to diagnose the root causes of decay

Why do pages experience content decay, and how do you diagnose the root cause? Pages decay when competitors ship better answers, search intent or SERP features shift, or technical and internal issues reduce visibility and clicks. Backlinko’s analysis of 11.8 million Google searches shows position one captures about 27.6 percent CTR while lower ranks lose the majority of clicks. Google’s research found that as page load time goes from 1 to 3 seconds, the probability of a bounce increases by 32 percent. Seasonality and algorithm updates can amplify declines, so you must map metrics to causes before choosing fixes.

Interpret metric patterns (CTR down vs impressions down vs position down)

A sharp CTR drop with stable position often signals SERP changes, such as a new featured snippet or more ads crowding above the fold.

- CTR down, impressions stable, position stable: likely SERP features or title and description issues

- Impressions down, position stable: demand drop, seasonality, or deindexing of long-tail variants

- Position down, impressions stable: competitor outrank, link loss, or relevance drop

- Position and impressions down together: site-wide issues or broad algorithmic impact

Recreate the timeline in Search Console to confirm pattern alignment across queries and devices.
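The pattern list above translates into a small rule function. The thresholds (a 2-position slip and 20 percent drops in impressions or CTR) are assumptions to tune against your own baselines. Position follows Search Console’s convention, where a larger number means a lower ranking.

```python
def classify_decay(impressions_pct, ctr_pct, position_delta,
                   drop=-0.20, rank_slip=2.0):
    """Map period-over-period changes to the likely cause of decay.

    impressions_pct and ctr_pct are relative changes (-0.3 means down 30%).
    position_delta is new average position minus old, so a positive value
    means the page now ranks lower. Thresholds are assumptions to tune.
    """
    position_down = position_delta >= rank_slip
    impressions_down = impressions_pct <= drop
    ctr_down = ctr_pct <= drop
    # Check rank first: a rank loss also lowers CTR, so CTR alone is ambiguous.
    if position_down and impressions_down:
        return "site-wide issue or broad algorithmic impact"
    if position_down:
        return "competitor outrank, link loss, or relevance drop"
    if impressions_down:
        return "demand drop, seasonality, or deindexing of long-tail variants"
    if ctr_down:
        return "SERP features or title and description issues"
    return "within normal fluctuation"
```

The checks run in order of specificity. A page that lost both rank and impressions is treated as a broad issue before any single-cause explanation is considered.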

When position falls, clicks fall disproportionately because CTR is non-linear by rank. In a large-scale study, position one averaged 27.6 percent CTR while position three captured roughly 11 percent, so slipping just two positions costs about 60 percent of the clicks. The same dynamic means a drop from position three to position eight can reduce clicks by multiples, even if impressions remain similar.

If position holds but CTR and clicks fall, look for zero-click behavior or SERP feature inflation. Peer-reviewed work on SERP feature prominence shows significant CTR displacement when features expand, independent of rank. Also compare device splits, because mobile SERPs push organic results below features, which intensifies CTR loss.

Use query-level comparisons to isolate where impressions dropped. A stable position with falling impressions may reflect a demand shift or canonicalization issues. Verify with Google Trends and Search Console’s URL inspection. For stable impressions with declining CTR, test new titles, align snippet content to intent, and monitor changes over a two-week window.
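To isolate where impressions dropped, you can join two periods of query-level rows. The sketch assumes rows shaped like the Search Analytics API response for a single `query` dimension (`keys`, `impressions`, `position`). It uses assumed thresholds: a 30 percent impression drop while average position moves by no more than one spot. Queries that disappear entirely are flagged too, since they may point to canonicalization or indexing problems.

```python
def impression_drops(before_rows, after_rows, min_drop=0.30, max_position_move=1.0):
    """Queries whose impressions fell while average position held steady.

    Rows follow the Search Analytics API shape for a single "query" dimension:
    {"keys": [query], "impressions": ..., "position": ..., ...}.
    Returns (query, relative change) pairs, worst first; -1.0 marks queries
    that vanished from the later period entirely.
    """
    before = {row["keys"][0]: row for row in before_rows}
    after = {row["keys"][0]: row for row in after_rows}
    flagged = []
    for query, old in before.items():
        if old["impressions"] == 0:
            continue
        new = after.get(query)
        if new is None:
            flagged.append((query, -1.0))  # check indexing and canonicals
            continue
        change = new["impressions"] / old["impressions"] - 1
        held = abs(new["position"] - old["position"]) <= max_position_move
        if change <= -min_drop and held:
            flagged.append((query, round(change, 3)))
    return sorted(flagged, key=lambda item: item[1])
```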

Competitor analysis: identifying new/better competing pages and SERP feature shifts

Competitors can overtake by publishing fresher, deeper, or more intent-matched pages.

- Track the top 10 for your head and mid-tail queries weekly

- Note publish and updated dates, content depth, media, and schema use

- Check link velocity and referring domain growth to competitors

- Record SERP features added: featured snippets, Top Stories, videos, shopping

A simple rank loss with stable impressions often indicates direct competitive replacement.

A featured snippet or other feature can siphon clicks even when your rank remains. The SISTRIX CTR study across millions of keywords shows steep CTR erosion as result types and features crowd the page, reducing the click share of classic blue links.

Research on SERP features highlights that answer boxes and People Also Ask can deflect attention, so confirm whether a competitor now holds these elements and how that correlates with your CTR.

Catalog the changes on winning competitor URLs. Use a changelog approach: headings, FAQs, comparative tables, original data, and E-E-A-T signals like author bios and citations. If a competitor added structured data that unlocked a rich result, validate their markup with Google’s Rich Results Test and assess whether adding compliant schema would regain visibility.

Intent shift detection: query analysis, SERP intent mapping, and search feature changes

Intent shifts when user needs evolve or search engines reinterpret the query’s dominant intent. Review the SERP layout: transactional elements like Shopping or local packs suggest commercial intent, while knowledge panels and videos indicate informational intent. When your page targets the wrong intent, rank and CTR degrade even if content quality is high.

Map queries to intents and validate against SERP features. For many head terms, featured snippets and People Also Ask indicate summary-seeking behavior. Backlinko reported featured snippets appear on roughly 12 percent of queries, which materially alters click pathways. Align your primary template with the observed intent, such as comparison pages for commercial investigations or detailed guides for informational searches.

Compare pre- and post-decay SERPs to quantify intent drift. Use timestamps to confirm when videos, Top Stories, or shopping modules emerged and whether that coincides with your decline. For dominant video SERPs, prioritize embedded video content and transcripts. For commercial surfaces, expand product attributes, pricing, and structured data to compete.

Technical regressions: CWV, indexing, canonical mistakes, duplicate content, and redirect errors

Technical issues can cause sudden or gradual decay even when content remains strong. Google reported that as page load time goes from one to three seconds, the probability of a bounce increases by 32 percent, and faster sites often show higher engagement and conversion rates. Review Core Web Vitals, image weights, and main-thread blocking time, and validate that your pages load quickly on mobile networks.

Indexing and canonicalization mistakes commonly suppress impressions. A stray noindex, incorrect canonicalization to a weaker variant, or poor parameter handling can push the target URL out of results. Google’s documentation on canonicalization and indexing outlines expected behaviors and edge cases that help you debug conflicts in signals such as hreflang and sitemaps. See the canonicalization overview on Google Search Central.

Redirect errors and duplication can split signals. Long redirect chains, mixed protocols, or inconsistent trailing slashes can dilute link equity and confuse canonical selection. Validate server responses, trace redirect hops, and standardize internal linking to the preferred URL to consolidate signals.
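Tracing redirect hops does not require live requests if your crawler exports a redirect list. This sketch assumes a plain source-to-target mapping, with hypothetical URLs. It reports the full chain so that multi-hop paths and loops stand out.

```python
def trace_redirects(url, redirects, max_hops=10):
    """Follow url through a source -> target redirect map and return the chain.

    Raises ValueError on a loop or when the chain exceeds max_hops.
    """
    chain, seen = [url], {url}
    while chain[-1] in redirects:
        target = redirects[chain[-1]]
        if target in seen:
            raise ValueError("redirect loop: " + " -> ".join(chain + [target]))
        chain.append(target)
        seen.add(target)
        if len(chain) - 1 > max_hops:
            raise ValueError(f"more than {max_hops} hops starting at {url}")
    return chain

# Hypothetical crawler export: a protocol fix, then a trailing-slash fix.
crawl_redirects = {
    "http://example.com/guide": "https://example.com/guide",
    "https://example.com/guide": "https://example.com/guide/",
}
chain = trace_redirects("http://example.com/guide", crawl_redirects)
print(len(chain) - 1, "hops:", " -> ".join(chain))
```

Any chain with more than one hop (`len(chain) > 2`) is a candidate for pointing the first URL straight at the final destination.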

Technical regressions often leave measurable fingerprints first.

- Sudden impressions collapse across many queries, suggesting deindexing or robots and canonical issues

- CLS or LCP regressions align with mobile CTR declines and higher pogo-sticking

- Unexpected parameter URLs gaining impressions indicate duplication and fragmentation

Address high-severity blockers first and reprocess affected URLs through inspection tools to speed recovery.

Internal causes: keyword cannibalization, poor internal linking, content rot, or outdated facts

Internal competition and weak linking often cap relevance. Cannibalization confuses intent when multiple URLs partially cover the same topic. The strongest page struggles to consolidate signals. Internal links distribute authority and context, so sparse or misaligned anchors can slow indexation and depress rank.

Large-scale studies show many pages fail to earn search clicks because they lack links or unique value. An Ahrefs analysis of over one billion pages found that 90.63 percent receive no Google traffic, with a lack of backlinks and search demand as primary factors.

See the breakdown in Ahrefs’ study. Stale content, outdated data, and old screenshots also reduce trust and engagement, which compounds CTR losses when snippets look dated.

Audit internal links to route authority and clarify intent. Use descriptive anchors pointing to the canonical target for each topic, and add links from high-traffic pages and hubs. Remove or merge overlapping pieces so one page can comprehensively satisfy the query.

Detect cannibalization before rewriting.

- Group queries by intent and landing page in Search Console

- For a query with two or more landing pages oscillating, pick a canonical winner

- Redirect or deoptimize secondary pages to support the primary

Consolidating signals usually recovers positions without net new content.
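A single-period version of the cannibalization check can run on query-and-page rows from Search Console. The sketch makes three assumptions:

- Rows follow the Search Analytics API row shape with dimensions `["query", "page"]`.
- Any page with at least 20 percent of a query’s impressions counts as a contender. This threshold is assumed.
- The page with the most clicks is the canonical winner.

Detecting pages that oscillate over time would need the same check repeated per week.

```python
from collections import defaultdict

def cannibalized_queries(rows, min_share=0.2):
    """Map each cannibalized query to (winner_page, [secondary_pages]).

    A page is a contender when it holds at least min_share of the query's
    impressions; the contender with the most clicks (then impressions) wins.
    """
    by_query = defaultdict(list)
    for row in rows:
        query, page = row["keys"]
        by_query[query].append((row["clicks"], row["impressions"], page))
    result = {}
    for query, pages in by_query.items():
        total = sum(impressions for _, impressions, _ in pages)
        if total == 0:
            continue
        contenders = [p for p in pages if p[1] / total >= min_share]
        if len(contenders) < 2:
            continue
        contenders.sort(reverse=True)  # clicks first, then impressions
        result[query] = (contenders[0][2], [p[2] for p in contenders[1:]])
    return result
```

Secondary pages returned here are the candidates to redirect or deoptimize in favor of the winner.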

Outdated facts undermine snippet eligibility.

- Replace aged stats with current-year data and cite primary sources

- Update schema, on-page dates, and screenshots to modern designs

- Expand FAQs and comparisons to match current alternatives

Fresh, authoritative updates improve relevance and snippet hit rates.

Algorithm updates, manual actions, and their fingerprints

Algorithm updates create step-change patterns that map to update dates.

- Site-wide drops across many queries on a single date align with core updates

- Topical sections diverging suggest classifier-specific changes

- Recovery lag despite fixes indicates reassessment cycles

Monitor Search Status Dashboard timelines to correlate movements.

Correlate your graph with public update windows. Google’s Search Status Dashboard lists confirmed updates and their durations, letting you match declines to specific events. Review the updated catalog on Google’s dashboard.
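Matching a decline to an update window is easier once you locate the step change. This sketch finds the day where the following week’s average clicks fall furthest below the previous week’s. It then checks that day against update windows you copy from the Search Status Dashboard. The window below is a hypothetical placeholder, not a real update.

```python
from datetime import date, timedelta

# Hypothetical placeholder window; copy real start and end dates from
# Google's Search Status Dashboard.
UPDATE_WINDOWS = [
    ("hypothetical core update", date(2025, 1, 10), date(2025, 1, 24)),
]

def find_step_change(daily_clicks, window=7):
    """Index of the day where the next `window` days' mean clicks fall
    furthest below the previous `window` days' mean, plus that ratio."""
    best_index, best_ratio = None, 1.0
    for i in range(window, len(daily_clicks) - window + 1):
        before = sum(daily_clicks[i - window:i]) / window
        after = sum(daily_clicks[i:i + window]) / window
        if before > 0 and after / before < best_ratio:
            best_index, best_ratio = i, after / before
    return best_index, best_ratio

def matching_updates(change_day, windows=UPDATE_WINDOWS, slack_days=3):
    """Names of update windows containing change_day, with slack for the lag
    between a rollout starting and its effect showing in your data."""
    slack = timedelta(days=slack_days)
    return [name for name, start, end in windows
            if start - slack <= change_day <= end + slack]

# Hypothetical series starting on 2025-01-01 with a step down after two weeks.
series_start = date(2025, 1, 1)
daily = [100] * 14 + [60] * 14
index, ratio = find_step_change(daily)
change_day = series_start + timedelta(days=index)
print(change_day, round(ratio, 2), matching_updates(change_day))
```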

Independent analyses, such as SISTRIX’s reporting on the June 2025 Google core update, show domains with visibility swings of 20 to 70 percent, underscoring how updates can re-weight intent and quality signals. See their data-backed commentary on SISTRIX’s blog.

Manual actions display explicit notices in Search Console.

- Look for manual action messages and affected sample URLs

- Fix violations such as unnatural links, thin content, or spam policies

- Submit a reconsideration request after remediation

Attributing decay correctly prevents wasted content rewrites when policy violations are the root cause.

Decide whether to update, consolidate, prune, or rebuild: mapping each diagnosis to repair steps

Map metric patterns to the smallest effective fix.

- CTR down, position stable: rewrite titles and meta to match intent, add structured data, and target SERP features

- Impressions down, position stable: check indexing, cannibalization, and demand trend. Expand to adjacent variants

- Position down, impressions stable: strengthen topical coverage, add internal links, and earn authoritative references

Implement a two-week measurement window per change to isolate lift.

Tie competitor and intent signals to content surgery.

- Competitor has a richer guide: update with deeper sections, visuals, and first-party data

- Intent shifted commercial: rebuild as a comparison or pricing page with schema and product attributes

- Video-heavy SERP: produce and embed video with transcript and clip markup

The goal is to match format and depth to the dominant intent, not just add words.

Apply technical and policy fixes before content work.

- Indexing and canonical errors: correct directives and consolidate duplicates with redirects

- Site-wide Core Web Vitals regressions: fix LCP, CLS, and INP, compress media, and defer non-critical JavaScript

- Manual action or spam risk: remediate issues first, then reconsider content investments

Stabilizing crawl, indexation, and credibility ensures content updates stick, turning diagnosis into a prioritized action plan that can be executed with confidence.

A prioritized playbook to fix decaying content

How do you fix content decay on existing pages? Identify decaying pages, score them by impact and effort, then choose to refresh, expand, consolidate, prune, or fix technical issues. Re-promote updated assets, monitor positions and CTR, and validate changes with controlled tests. Finish by deciding to update, redirect, or delete based on risk and ROI. Recheck results at 4 to 12 weeks before scaling the approach sitewide.

1. Triage framework: impact (traffic/conversions) × effort (hours/complexity) to rank pages for action

Start with a consistent scoring model so you focus on outcomes, not opinions. Measure impact using the last 90 to 180 days of organic sessions, assisted and last-click conversions, and revenue per session. Estimate effort by hours and complexity across writing, design, engineering, and stakeholder sign-off, then compute a simple impact-to-effort ratio to rank work.

A high-impact, low-effort page typically shows a recent decline in position, stable impressions, and a dropping CTR. Use Search Console to spot queries that slipped one to three positions. Even small ranking changes move clicks materially: the top result averages around 27.6 percent CTR in Backlinko’s large-scale CTR analysis. Weight business value higher for pages tied to conversions or high-intent queries.

Group pages into quick wins, strategic updates, and backlog. Quick wins include pages needing date refreshes, minor on-page fixes, or new internal links. Strategic updates include rewrites, consolidation, and schema expansion, while backlog groups low-potential items until new data changes their score.
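The impact-to-effort ranking and the three buckets can be sketched as follows. Impact here is revenue at stake: organic sessions × revenue per session × an optional business weight. The 4-hour quick-win ceiling, the minimum score of 50, and the sample pages are all hypothetical values to calibrate for your site.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    url: str
    sessions: int               # organic sessions over the last 90-180 days
    revenue_per_session: float
    effort_hours: float         # writing, design, engineering, sign-off
    weight: float = 1.0         # hypothetical boost for money pages

    @property
    def score(self) -> float:
        impact = self.sessions * self.revenue_per_session * self.weight
        # Floor effort at one hour so trivial fixes do not produce huge ratios.
        return impact / max(self.effort_hours, 1.0)

def triage(candidates, quick_max_hours=4, min_score=50.0):
    """Sort candidates by score and drop each into a work bucket."""
    buckets = {"quick_wins": [], "strategic": [], "backlog": []}
    for c in sorted(candidates, key=lambda c: c.score, reverse=True):
        if c.score < min_score:
            buckets["backlog"].append(c.url)
        elif c.effort_hours <= quick_max_hours:
            buckets["quick_wins"].append(c.url)
        else:
            buckets["strategic"].append(c.url)
    return buckets

print(triage([
    Candidate("/blog/crm-checklist", 5000, 0.8, 2),
    Candidate("/guides/crm-pricing", 20000, 0.5, 40, weight=1.5),
    Candidate("/news/2021-launch", 300, 0.1, 10),
]))
```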

2. Refresh: update facts, stats, multimedia, publication date handling, internal links, and CTAs

Refreshing stabilizes rankings when the content is still aligned with intent but no longer current. Replace outdated statistics, screenshots, and step-by-step processes, and ensure visuals match today’s interfaces. Write tighter intros, improve scannability with descriptive subheads, and adjust CTAs to align with the reader’s level of awareness.

Keep freshness signals accurate and honest. Update the body copy first, then revise the on-page last-updated date and the dateModified property in your Article structured data. Google documents date handling for articles and recommends accurate timestamps for clarity in search results. See Article structured data. Do not change the original publication date unless you have substantially reworked the piece.
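Here is a minimal sketch of that date handling, assuming Python generates the JSON-LD for your template. `datePublished` stays fixed while `dateModified` moves with each substantive update. The property names come from schema.org’s Article type as used in Google’s Article documentation. The headline, author, and dates are hypothetical.

```python
import json
from datetime import datetime, timezone

def article_jsonld(headline, author, published, modified):
    """Article JSON-LD that keeps datePublished fixed and advances dateModified.

    published and modified are timezone-aware datetimes serialized as ISO 8601.
    """
    if modified < published:
        raise ValueError("dateModified cannot be earlier than datePublished")
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": [{"@type": "Person", "name": author}],
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),
    }
    return json.dumps(data, indent=2)

# Hypothetical article: first published in 2023, substantively refreshed in 2025.
print(article_jsonld(
    "How to stop content decay",
    "Jane Doe",
    published=datetime(2023, 4, 2, 9, 0, tzinfo=timezone.utc),
    modified=datetime(2025, 6, 1, 9, 0, tzinfo=timezone.utc),
))
```

The guard against a modified date earlier than the published date catches a common template bug where the two fields are swapped.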

Validate results with evidence. HubSpot reported that historical optimization increased organic traffic to old posts by 106 percent in their experiment, demonstrating the power of targeted refreshes for existing URLs. See HubSpot historical optimization. Pair updates with new internal links from recent, crawled posts and from relevant evergreen pages.

3. Expand: add depth, related keywords, FAQs/schema, examples, and data where intent broadened

When search intent broadens, expansion prevents cannibalization and strengthens topical coverage. Mine Search Console for rising queries and subtopics your page almost ranks for, then add sections that directly answer these gaps. Include examples, short calculations, and templates to satisfy informational and transactional micro-intents.

- Map new H2 and H3 sections to clusters of semantically related queries with shared intent

- Add FAQs that answer explicit follow-ups surfaced in People Also Ask and your support inbox

- Use statistic callouts, short case vignettes, and step tables to increase utility and dwell time

- Expand image alt text and captions to reinforce relevance without stuffing keywords

- Add internal links to and from related guides to route authority and aid discovery

Expanded sections should improve task completion rate and reduce pogo-sticking, which often co-moves with CTR improvements on stable impression sets.

Close with structured data where eligible, but check eligibility first. Google now shows FAQ rich results only for well-known, authoritative government and health websites, and it no longer shows HowTo rich results. For most sites, this markup will not unlock a rich result. Google’s documentation explains current requirements and limitations for these result types. See FAQ structured data. Monitor rich result impressions and clicks in Search Console to validate any lift.

4. Consolidate: merge thin/overlapping pages, implement 301s, update backlinks, and internal links to preserve equity

Consolidation reduces duplication, strengthens a single canonical, and simplifies maintenance. Identify overlapping pages competing for the same head term or subtopics, then define the strongest target URL. Merge the best content from supporting pages, remove weak or redundant sections, and standardize titles, H1s, and metas.

- Implement one-to-one 301 redirects from merged pages to the target URL

- Update canonical tags and remove conflicting noindex or legacy canonicals

- Refresh internal links sitewide to point to the new target with descriptive anchors

- Audit top backlinks to the merged pages and request updates to the new URL

- Resubmit the target URL in Search Console and monitor crawl and coverage reports

A clean redirect map prevents index bloat and preserves accumulated authority.
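Before shipping a consolidation, collapse the redirect map so every merged URL points straight at its final target in one hop. Here is a minimal sketch, assuming the map is a dict of source-to-target paths (hypothetical examples) that are all intended as 301s.

```python
def flatten_redirects(redirects):
    """Point every source straight at its final destination (one hop each).

    redirects maps source path -> target path, all intended as 301s.
    Raises ValueError if following the map would loop.
    """
    flat = {}
    for source, target in redirects.items():
        seen = {source}
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flat[source] = target
    return flat

# Hypothetical merge: an older consolidation left /guide-2023 redirecting too.
merged = {
    "/old-guide": "/guide-2023",
    "/guide-2023": "/guide",
    "/faq-crm": "/guide",
}
print(flatten_redirects(merged))
```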

Google documents that site moves with URL changes are supported and that redirects consolidate signals over time, so prefer 301s for permanent merges and let them persist indefinitely. See site move with URL changes. Track rankings and clicks for merged queries. Expect short-term volatility, followed by stabilization if the intent is met better on one robust URL.

5. Prune: when to remove pages (low value, regulatory risk) and safe pruning patterns

Prune content that cannot be refreshed to meet user needs, introduces duplication, or poses regulatory risk. Candidates include thin tag pages, orphaned announcements, expired promos, and outdated how-tos that cannot be made accurate. Also, review content with persistent zero impressions and zero clicks over 12 months where no internal links justify retention.

- Prefer 301 to a close substitute when a clear replacement exists

- Use 410 (Gone) when removal is intentional and no alternative is appropriate

- For compliance removals, combine 410 with cache removal and structured data cleanup

- Remove internal links to pruned URLs and update sitemaps to reflect the change

- Maintain a change log for legal and analytics traceability
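Keeping the sitemap in sync with pruning can be automated from a page inventory. This sketch assumes hypothetical inventory fields: `url`, `status`, `noindex`, `canonical`, and `lastmod`. It writes a sitemap in the sitemaps.org 0.9 namespace containing only live, indexable, self-canonical URLs. Pruned (410) and redirected (301) URLs therefore drop out automatically.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Sitemap XML listing only live, indexable, self-canonical URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        # Skip pruned (410), redirected (301), noindexed, or non-canonical URLs.
        if page["status"] != 200 or page["noindex"] or page["canonical"] != page["url"]:
            continue
        node = ET.SubElement(urlset, "url")
        ET.SubElement(node, "loc").text = page["url"]
        ET.SubElement(node, "lastmod").text = page["lastmod"]
    return ET.tostring(urlset, encoding="unicode")

inventory = [  # hypothetical inventory rows
    {"url": "https://example.com/guide", "status": 200, "noindex": False,
     "canonical": "https://example.com/guide", "lastmod": "2025-06-01"},
    {"url": "https://example.com/old-promo", "status": 410, "noindex": False,
     "canonical": "https://example.com/old-promo", "lastmod": "2022-01-01"},
    {"url": "https://example.com/guide?ref=x", "status": 200, "noindex": False,
     "canonical": "https://example.com/guide", "lastmod": "2025-06-01"},
]
print(build_sitemap(inventory))
```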

Large sites benefit from reallocating crawl budget after reducing low-value URLs. Google explains how unnecessary URLs can affect crawling at scale. See crawl budget guidance. Monitor coverage reports and server logs for crawl patterns after pruning.

Balance content pruning with user needs. If a page has historical backlinks but weak content, consider consolidating rather than deleting it. When in doubt, run a holdout test by deindexing a subset and measuring impact on related pages’ traffic.

6. Technical fixes: CWV tuning, canonical/redirect chains, robots/indexing fixes

Technical debt accelerates content decay by depressing visibility and user engagement. Audit Core Web Vitals, eliminate render-blocking resources, compress images, and adopt server-side or static rendering where feasible. Reduce template bloat and prefetch critical routes to cut time to first interaction.

- Flatten redirect chains. Keep to a single hop where possible to preserve crawl efficiency

- Fix inconsistent canonicals, hreflang mismatches, and duplicate content from URL parameters

- Remove accidental noindex and disallow rules that block strategic templates

- Ensure structured data validity and match on-page content to avoid manual actions

- Serve sitemaps only for indexable, canonical URLs to guide discovery

As load time increases from one to three seconds, the probability of bounce increases by 32 percent, and from one to five seconds by 90 percent, which quantifies the engagement risk of slow pages. See Think with Google speed data.

For deeper performance tuning, confirm thresholds and diagnostics in a dedicated Core Web Vitals guide that covers measurement and remediation across templates. Field data from the Chrome UX Report should drive prioritization, as lab scores can diverge from the user experience. Pair fixes with a change freeze for one to two weeks to isolate the impact and reduce confounding.
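Field-data prioritization usually means judging each metric at the 75th percentile of real-user samples. The thresholds below are Google’s published Core Web Vitals boundaries:

- LCP: good up to 2.5 s, poor above 4 s
- INP: good up to 200 ms, poor above 500 ms
- CLS: good up to 0.1, poor above 0.25

The sample values and the assumption that you collect raw real-user samples per template are illustrative. CrUX already reports the 75th percentile, so with CrUX data you would skip the quantile step.

```python
from statistics import quantiles

# (good upper bound, poor lower bound) for each metric: LCP and INP in
# milliseconds, CLS unitless, per Google's Core Web Vitals thresholds.
THRESHOLDS = {"LCP": (2500, 4000), "INP": (200, 500), "CLS": (0.1, 0.25)}

def p75(values):
    """75th percentile; quantiles(n=4) returns the three quartile cut points."""
    return quantiles(values, n=4, method="inclusive")[2]

def assess(field_samples):
    """Rate each metric at its 75th percentile as good, needs improvement, or poor."""
    ratings = {}
    for metric, values in field_samples.items():
        good, poor = THRESHOLDS[metric]
        value = p75(values)
        if value <= good:
            rating = "good"
        elif value <= poor:
            rating = "needs improvement"
        else:
            rating = "poor"
        ratings[metric] = (value, rating)
    return ratings

# Hypothetical real-user samples for one template.
print(assess({
    "LCP": [1800, 2100, 2300, 2600, 3900],
    "INP": [80, 120, 150, 180, 600],
    "CLS": [0.02, 0.05, 0.3, 0.3, 0.4],
}))
```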

7. Re‑promote and rebuild authority: outreach, internal link boosts, paid social, and link reclamation

Promotion combats decay by renewing discovery and earning links. Announce substantial updates to your email list and social channels and pitch relevant sections to journalists or newsletter editors. Add contextual links from fresh high-traffic posts and cornerstone pieces to route authority.

- Compile unlinked brand mentions and request proper attribution to reclaim links

- Republish a condensed version on partner publications with a canonical link back to the original

- Use modest paid social boosts to seed engagement on platforms where your audience is active

- Submit updated visuals to image-heavy platforms with links in descriptions

- Share data or templates as standalone assets that attract references

A large-scale analysis found 94 percent of content earns zero external links, underscoring the need for deliberate promotion and outreach beyond publish-and-pray tactics. See Backlinko 912M post analysis. Track assisted conversions and referral traffic to quantify ROI from promotion waves.

Treat internal links like a promotion lever. Add links from page templates, nav, and related articles, and ensure anchor text reflects the updated angle. Re-crawl the site after changes to confirm link graph updates.

8. Experiment and validate: content A/B tests, compare CTR and positions over 4–12 weeks

Validate improvements instead of assuming causality. For templates, run SEO A/B tests using server-side bucketing to compare control and variant pages under similar conditions. For single URLs, stagger updates and track positions, CTR, and conversions over 4 to 12 weeks to account for reindexing and seasonality.

- Define a primary KPI per test, such as CTR for title tweaks, positions for content depth, or conversions for CTA changes

- Hold other variables constant. Avoid simultaneous changes that obscure attribution

- Predefine a minimum detectable effect and test duration based on traffic volume

- Use non-parametric tests when data are skewed. Avoid over-interpreting small uplifts

- Archive test results to inform future playbooks and avoid repeat failures
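For skewed page-level CTR, a permutation test is one non-parametric option. A percentile bootstrap gives the confidence interval to report alongside the point estimate. This sketch assumes you already have one CTR value per page in the control and variant buckets. The sample values are hypothetical.

```python
import random

def _mean(values):
    return sum(values) / len(values)

def permutation_p_value(control, variant, iterations=10_000, seed=7):
    """Two-sided permutation test on the difference in mean page-level CTR."""
    rng = random.Random(seed)
    observed = _mean(variant) - _mean(control)
    pooled = list(control) + list(variant)
    n = len(control)
    extreme = 0
    for _ in range(iterations):
        rng.shuffle(pooled)
        if abs(_mean(pooled[n:]) - _mean(pooled[:n])) >= abs(observed):
            extreme += 1
    # The +1 terms count the observed split itself, so p is never exactly zero.
    return (extreme + 1) / (iterations + 1)

def bootstrap_ci(control, variant, iterations=10_000, alpha=0.05, seed=7):
    """Percentile bootstrap interval for the difference in mean CTR."""
    rng = random.Random(seed)
    diffs = sorted(
        _mean(rng.choices(variant, k=len(variant)))
        - _mean(rng.choices(control, k=len(control)))
        for _ in range(iterations)
    )
    low = diffs[int(alpha / 2 * iterations)]
    high = diffs[int((1 - alpha / 2) * iterations) - 1]
    return low, high

control = [0.030, 0.032, 0.029, 0.031, 0.028, 0.030, 0.033, 0.027]
variant = [0.036, 0.038, 0.035, 0.037, 0.034, 0.039, 0.036, 0.035]
print(permutation_p_value(control, variant), bootstrap_ci(control, variant))
```

The fixed seed makes reruns reproducible, which helps when archiving test results.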

Public case libraries show meta title experiments can produce meaningful uplifts on mature pages. Multiple documented tests report positive lifts of mid-single to low-double digits, depending on the query mix. See SearchPilot SEO A/B tests. Validate learnings with follow-up experiments to ensure portability across templates.

Communicate outcomes with confidence intervals, not just point estimates. Flag winners for rollout, iterate on near-misses, and revert clear losers. When results are inconclusive, extend the test or adjust the hypothesis to target a clearer mechanism.

9. Choose between update, redirect, or delete using the decision trees and risk checklist

Decide action using two axes: business value and fixability. Update when the topic still maps to a priority keyword, search intent is stable, and content gaps are solvable within a reasonable effort. Redirect when stronger substitutes exist, or consolidation will resolve cannibalization and improve authority concentration.

- Use delete (410) only when the topic is obsolete, risky, or misleading, and no substitute exists

- Prefer updating when the URL has strong backlinks or branded demand that a new URL would dilute

- Choose redirect when multiple URLs split impressions, or when migrating to a canonical pillar

- Escalate legal, compliance, and medical claims to subject-matter review before any decision

- Document the rationale, expected KPIs, and rollback plan for each action

Mitigate risk by snapshotting current rankings, exporting top linking domains, and preserving analytics annotations. After action, monitor coverage, impressions, CTR, and conversions for at least two re-crawl cycles to confirm the decision delivered expected outcomes. If metrics degrade beyond tolerance, roll back quickly and reassess alignment with searcher intent, which keeps the focus on measurable recovery rather than churn.

Measure impact, automate detection, and build governance

How do you prove recovery from content decay, scale the work, and prevent repeat decay? Define clear KPIs, calculate ROI for refresh vs new content, set a recurring audit cadence with SLAs, and automate detection with alerts and syncs. Track every change with version control, prioritize via a scoring model, and embed sign-offs to move work fast without losing control. Close the loop by reporting conversions and revenue impact. Reassess thresholds quarterly to reflect seasonality and algorithm shifts.

1. KPIs to report: recovered clicks, impressions, CTR change, position deltas, conversion lift, ROI of refresh vs. new

Track performance using consistent definitions so trends reflect real recovery, not noise. Use clicks, impressions, CTR, and average position as your primary recovery set, then layer conversions and revenue.

Google documents how Search Console calculates clicks, impressions, CTR, and position to avoid misinterpretation of deltas across devices and queries, which helps standardize reporting across teams. See the Performance report definitions in the Search Console Help Center.

Contextualize CTR changes with benchmarked expectations to set realistic targets. A large portion of CTR is driven by rank and SERP features.

The SISTRIX study measured desktop CTR at 28.5 percent for position one, 15.7 percent for position two, and 11.0 percent for position three across millions of keywords, highlighting why position delta belongs in recovery KPIs. See SISTRIX CTR study. Combine those benchmarks with your branded vs non‑branded split to isolate decay recovery from brand demand.
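Using those benchmarks, the click impact of a position change is impressions × (CTR at the new position minus CTR at the old one). Here is a minimal sketch that assumes impressions stay constant and that your CTR curve matches the SISTRIX averages, which real pages rarely do exactly.

```python
# Desktop CTR by position as quoted from the SISTRIX study above.
SISTRIX_CTR = {1: 0.285, 2: 0.157, 3: 0.110}

def expected_click_delta(impressions, old_position, new_position, ctr=SISTRIX_CTR):
    """Clicks gained (positive) or lost (negative) when a query moves between
    positions, assuming impressions stay constant and CTR follows the benchmark."""
    return impressions * (ctr[new_position] - ctr[old_position])

# Hypothetical query with 10,000 monthly impressions.
recovered = expected_click_delta(10_000, old_position=3, new_position=1)  # about +1,750
slipped = expected_click_delta(10_000, old_position=1, new_position=2)    # about -1,280
```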

Translate recovery into funnel impact to prove business value. Map recovered clicks to conversions and revenue using your analytics goals and attribution model. Report both absolute gains, such as plus 12,400 clicks and plus 140 leads, and efficiency metrics, such as CPA and ROAS, to compare refresh ROI against net-new content in the same period.

Core recovery KPIs to include in every report:

- Recovered clicks and impressions vs baseline and vs prior period

- CTR change and average position delta at page and query levels

- Assisted and last-click conversions, revenue, and CPA or ROAS shift

- Share of voice in target clusters and cannibalization resolved

- ROI: refresh cost vs incremental revenue relative to new content

Tie each KPI to an explicit threshold, such as plus 20 percent clicks quarter over quarter, so status can roll up with simple green, yellow, or red signals.
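Threshold-based status can be computed directly from baseline and current KPI values. The targets below are hypothetical, except for the 20 percent click target mentioned above. Position is handled separately because a lower number is better, and the sketch treats a gain of at least one position as green.

```python
# Hypothetical quarter-over-quarter targets (relative change).
TARGETS = {"clicks": 0.20, "impressions": 0.10, "ctr": 0.05, "conversions": 0.15}

def status(change, target):
    """Green at or above target, red if the KPI went backwards, yellow otherwise."""
    if change >= target:
        return "green"
    return "red" if change < 0 else "yellow"

def kpi_report(baseline, current, targets=TARGETS, position_target=1.0):
    report = {}
    for kpi, target in targets.items():
        change = current[kpi] / baseline[kpi] - 1
        report[kpi] = (round(change, 3), status(change, target))
    # Average position: lower is better, so measure positions gained.
    gained = baseline["position"] - current["position"]
    report["position"] = (round(gained, 2), status(gained, position_target))
    return report
```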

2. How to calculate ROI: estimate traffic-to-lead and lead-to-revenue to compare refresh vs. new

Use a simple, auditable model:

- Estimate incremental sessions from refresh using clicks delta from Search Console, adjusted for non‑Google traffic mix

- Apply the visit-to-lead rate and the lead-to-sale rate from analytics or your CRM

- Multiply by average order value or revenue per deal to get revenue impact

- Compare incremental revenue with total refresh cost (research, writing, design, and QA) to get ROI. Repeat for a comparable new article

- Prefer median assumptions over averages to reduce skew from outliers

State ranges for each assumption and show best, base, and worst cases to communicate uncertainty.

Model a worked example to drive alignment. Suppose a refresh adds 8,000 monthly clicks, with 70 percent landing on target pages. If your visit-to-lead rate is 1.8 percent and your lead-to-sale rate is 22 percent, you forecast about 22 sales (8,000 × 0.70 × 0.018 × 0.22 ≈ 22.2). At 1,900 euros average revenue per sale, 22 sales come to 41,800 euros per month.

If the refresh cost is 1,200 euros all in, the net month-one ROI is about 33.8x ((41,800 - 1,200) / 1,200). New content with zero initial rankings may need months to catch up. HubSpot reported a 106 percent increase in organic views to historically optimized posts, supporting why refresh often outperforms net new in the short term. See HubSpot historical optimization.
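The same model can be written as a small function, so finance and content teams can rerun it with their own inputs. It counts whole deals only by rounding sales down, which reproduces the 22 sales and 41,800 euros in the example. It reports net ROI as (revenue minus cost) divided by cost. The worst-case and best-case rates are hypothetical ranges around the base case.

```python
from math import floor

def refresh_roi(added_clicks, target_share, visit_to_lead, lead_to_sale,
                revenue_per_sale, cost):
    """Monthly revenue and net ROI from a refresh, counting whole deals only."""
    sessions = added_clicks * target_share
    leads = sessions * visit_to_lead
    sales = floor(leads * lead_to_sale)
    revenue = sales * revenue_per_sale
    roi = (revenue - cost) / cost  # net return per euro spent
    return {"leads": leads, "sales": sales, "revenue": revenue, "roi": roi}

SCENARIOS = {  # hypothetical ranges around the base case
    "worst": {"visit_to_lead": 0.012, "lead_to_sale": 0.15},
    "base": {"visit_to_lead": 0.018, "lead_to_sale": 0.22},
    "best": {"visit_to_lead": 0.024, "lead_to_sale": 0.28},
}

for name, rates in SCENARIOS.items():
    result = refresh_roi(8_000, 0.70, revenue_per_sale=1_900, cost=1_200, **rates)
    print(f"{name:>5}: {result['sales']} sales, {result['revenue']} EUR, ROI {result['roi']:.1f}x")
```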

Add safeguards and comparisons:

- Contrast refresh vs new using the same cluster and intent level

- Discount for cannibalization and brand queries to avoid double-counting

- Include maintenance cost in year-one ROI for both paths

- Use cohort analysis to observe decay slopes pre- and post-refresh

- Incorporate risk: new content faces a higher risk of never earning search traffic. Ahrefs found 90.63 percent of pages get no Google traffic. See Ahrefs study

Recalculate the model after 30, 60, and 90 days to validate assumptions and update future forecasts.

3. Audit cadence and SLAs: monthly scans, quarterly deep audits, owners, and ticket SLAs

Establish a predictable rhythm:

- Monthly scans for traffic deltas, rank drops, and cannibalization flags

- Quarterly deep audits for technical health, topical coverage, and link equity

- Biannual taxonomy review to retire, merge, or replatform low-value assets

- Annual governance update covering policies, templates, and thresholds

- Ad hoc audits after major core updates or site changes

Use a rotating owner schedule to ensure coverage during holidays and peak campaigns.

Define owners and handoffs to compress the mean time to recovery. Assign a detection owner (analytics or SEO), a content owner (editor or SME), and a delivery owner (PM), with clear entry and exit criteria. Route decay issues through a standard ticket with severity labels that map to SLA clocks.

Set SLAs that reflect business impact and team capacity. For example, P1 issues on money pages could follow these clocks:

- Detection to triage within one business day
- Triage to fix plan within three days
- Content shipped within seven to ten days, depending on scope

Service teams formalize SLA definitions and expectations, and an overview of SLA components helps align scope and timing across functions. See Atlassian guide to SLAs.
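SLA clocks are easier to enforce when due dates are computed rather than estimated. Here is a minimal sketch, assuming:

- Each stage's clock starts when the previous stage ends.
- Business days exclude only weekends, with no holiday calendar.
- The three-day fix-plan budget counts business days.
- The P1 ship budget uses the upper end of the seven-to-ten-day range.

The P2 budgets are hypothetical.

```python
from datetime import date, timedelta

SLA_DAYS = {  # business days per stage
    "P1": {"triage": 1, "fix_plan": 3, "ship": 10},
    "P2": {"triage": 3, "fix_plan": 5, "ship": 20},  # hypothetical
}

def add_business_days(start, days):
    """Advance `days` weekdays from start, skipping Saturdays and Sundays."""
    current = start
    while days > 0:
        current += timedelta(days=1)
        if current.weekday() < 5:  # Monday=0 ... Friday=4
            days -= 1
    return current

def sla_deadlines(detected, severity):
    """Due date per stage, each stage's clock starting when the previous ends."""
    deadlines, clock = {}, detected
    for stage, days in SLA_DAYS[severity].items():
        clock = add_business_days(clock, days)
        deadlines[stage] = clock
    return deadlines

print(sla_deadlines(date(2025, 6, 6), "P1"))  # detected on a Friday
```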

4. Automation and alerts: scheduled GSC exports, GA anomaly detection, Clearscope/Revive integrations, StoryChief/GSC syncs

Automate signals so decay never catches you off guard. Schedule Search Console API exports daily or weekly for queries, pages, and search appearance. Then apply thresholds for multi-period drops, such as clicks over the last 28 days down 30 percent versus the prior 28 days, with the latest 7-day window still falling.

GA4 offers anomaly detection and custom insights that can flag unexpected dips in sessions, conversions, or landing page performance. Google documents how anomaly detection works in time series models. See GA4 insights and anomalies.

Use content intelligence and publishing syncs to keep briefs and live pages aligned. Clearscope or Revive can surface content gaps and term drift against top competitors and intent. StoryChief and similar CMS connectors can sync metadata with Search Console to validate indexation, canonicalization, and rich result eligibility.

The Search Console API reference outlines available data for programmatic checks. See Search Console API. For load experience regressions that depress rankings and conversions, reference the internal Core Web Vitals guide when setting alert thresholds.
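Scheduled exports need to page through results, because a single Search Analytics request returns at most 25,000 rows. This sketch takes any `fetch` callable that sends a request body and returns the JSON response, so it can be tested without credentials. The 28-day window and the `page`/`query` dimensions are assumptions.

```python
from datetime import date, timedelta

ROW_LIMIT = 25_000  # per-request maximum for Search Analytics queries

def fetch_all_rows(fetch, end, days=28, dimensions=("page", "query")):
    """Page through a Search Analytics query until a short page comes back.

    fetch takes a request body dict and returns the API's JSON response,
    a dict with an optional "rows" list.
    """
    start = end - timedelta(days=days - 1)
    body = {
        "startDate": start.isoformat(),
        "endDate": end.isoformat(),
        "dimensions": list(dimensions),
        "type": "web",
        "rowLimit": ROW_LIMIT,
        "startRow": 0,
    }
    rows = []
    while True:
        page = fetch(dict(body)).get("rows", [])
        rows.extend(page)
        if len(page) < ROW_LIMIT:
            return rows
        body["startRow"] += ROW_LIMIT
```

With the Google API Python client, `fetch` could be `lambda body: service.searchanalytics().query(siteUrl=site_url, body=body).execute()`. Here `service` comes from `build("searchconsole", "v1", credentials=creds)`, and `site_url` is your verified property.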

Make alerts actionable:

- Alert formats must include page, query cluster, drop size, suspected cause, and recommended next action

- Group pages into clusters to avoid scattered noise from single-query swings

- Include suppression logic for seasonality and campaign pauses

- Append the last change date and the owner to speed triage

- Attach a one-click ticket creation link with the correct template
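The alert rule and payload can be combined in one function. This sketch assumes a daily clicks list per page, oldest first, with at least 56 days. It reads "still falling" as the latest week sitting at least 10 percent below the week before. The suspected cause is left as a placeholder for the metric-pattern check. Seasonality suppression would need a year-over-year comparison, which is not included here.

```python
def decay_alert(page, cluster, daily_clicks, owner, last_change,
                drop=0.30, accel=0.10):
    """Return an alert dict for one page, or None if no alert is warranted.

    daily_clicks holds at least 56 days of clicks, oldest first.
    """
    if len(daily_clicks) < 56:
        return None
    current_28, prior_28 = sum(daily_clicks[-28:]), sum(daily_clicks[-56:-28])
    last_7, prior_7 = sum(daily_clicks[-7:]), sum(daily_clicks[-14:-7])
    if prior_28 == 0 or prior_7 == 0:
        return None
    change_28 = current_28 / prior_28 - 1
    change_7 = last_7 / prior_7 - 1
    # Alert only when the 28-day drop is large AND the latest week is still falling.
    if change_28 > -drop or change_7 > -accel:
        return None
    return {
        "page": page,
        "query_cluster": cluster,
        "drop_28d": round(change_28, 3),
        "drop_7d": round(change_7, 3),
        "suspected_cause": "unclassified: run the metric-pattern check",
        "next_action": "open a decay ticket and triage in Search Console",
        "owner": owner,
        "last_change": last_change,
    }
```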

Review alert precision and recall monthly to reduce false positives without missing real decay.

5. Tracking changes: version control, change logs, publish-date policies, and rollback plans

Treat content like software to achieve reproducibility. Use Git or your CMS’s revisioning to snapshot copy, metadata, schema, and media assets.

Versioning systems reduce ambiguity during rollbacks and audits. See About version control. Keep structured change logs that summarize what changed and why, referencing the ticket ID and hypothesis.

Document publish and update policies to maintain trust and recency signals. Show the updated date when material changes occur. Retain the original publish date in the schema to avoid confusing readers and search engines. When only formatting or minor fixes change, do not refresh the update date to preserve signal integrity.

Prepare rollback plans for high-risk pages. Define technical steps to restore a prior revision, revert the schema, and re-submit in Search Console if needed.

If you use WordPress, confirm that revisions are enabled and accessible so editors can restore versions without developer support. See WordPress revisions.

6. Prioritization workflows: scoring model, ticket templates, and stakeholder sign-offs

Build a transparent scoring model so the highest-impact decay is fixed first. Combine four factors into a single score: impact (traffic and revenue at risk), confidence (data quality and diagnostic clarity), effort (editorial and dev hours), and strategic value (cluster importance).

The RICE framework formalizes this approach and improves consistency across teams by quantifying Reach, Impact, Confidence, and Effort. See RICE prioritization.
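RICE scores a ticket as (Reach × Impact × Confidence) / Effort. Applied to decay tickets, a minimal sketch could treat reach as monthly clicks at risk and effort as person-days. The optional strategic multiplier for cluster importance is an extension, not part of standard RICE. The tickets are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class DecayTicket:
    url: str
    reach: float        # monthly organic clicks at risk
    impact: float       # RICE scale: 3 massive, 2 high, 1 medium, 0.5 low, 0.25 minimal
    confidence: float   # 0-1: data quality and diagnostic clarity
    effort: float       # person-days of editorial and dev work
    strategic: float = 1.0  # cluster-importance multiplier (extension, not RICE)

    def score(self) -> float:
        return self.reach * self.impact * self.confidence / self.effort * self.strategic

tickets = [
    DecayTicket("/crm-pricing-guide", reach=4000, impact=2, confidence=0.8, effort=4),
    DecayTicket("/blog/old-stats", reach=9000, impact=0.5, confidence=0.5, effort=2),
    DecayTicket("/crm", reach=2500, impact=1, confidence=1.0, effort=5, strategic=1.5),
]
for t in sorted(tickets, key=DecayTicket.score, reverse=True):
    print(f"{t.score():8.1f}  {t.url}")
```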

Create a standard ticket template:

- Page and cluster, affected queries, and drop magnitude

- Suspected causes with evidence (content drift, SERP change, tech regression)

- Proposed fix type (refresh, expand, consolidate, redirect)

- Acceptance criteria and measurement plan with target KPI deltas

- Owner, reviewer, ETA, risk level, and rollback plan

A solid template reduces back and forth and shortens the cycle time from detection to deployment.
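One way to enforce the template is to make it a typed record that flags incomplete tickets before triage. The `DecayTicket` class, its field names, and the allowed fix types are hypothetical. They mirror the checklist above.

```python
from __future__ import annotations

from dataclasses import dataclass, fields

FIX_TYPES = {"refresh", "expand", "consolidate", "redirect"}


@dataclass
class DecayTicket:
    page: str
    cluster: str
    affected_queries: list[str]
    drop_magnitude_pct: float
    suspected_causes: list[str]   # e.g. ["content drift", "SERP change", "tech regression"]
    evidence: str
    fix_type: str                 # one of FIX_TYPES
    acceptance_criteria: str
    target_kpi_delta: str         # e.g. "+20% clicks within 8 weeks"
    owner: str
    reviewer: str
    eta: str
    risk_level: str
    rollback_plan: str

    def validate(self) -> list[str]:
        """Return problems; an empty list means the ticket is ready for triage."""
        problems = [f.name for f in fields(self) if getattr(self, f.name) in ("", [], None)]
        if self.fix_type and self.fix_type not in FIX_TYPES:
            problems.append(f"fix_type must be one of {sorted(FIX_TYPES)}")
        return problems


ticket = DecayTicket(
    page="/pricing-guide", cluster="pricing", affected_queries=["saas pricing"],
    drop_magnitude_pct=35.0, suspected_causes=["SERP change"],
    evidence="New SERP feature above the result since last month",
    fix_type="refresh", acceptance_criteria="CTR back to 28-day baseline",
    target_kpi_delta="+20% clicks within 8 weeks", owner="ana", reviewer="",
    eta="2024-07-01", risk_level="medium", rollback_plan="Restore previous revision",
)
print(ticket.validate())  # the empty reviewer field is reported
```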

Secure fast approvals without blocking throughput by defining who must review what: legal or brand for regulated pages, an SME for technical accuracy, SEO for on-page compliance, and the PM for scope and timing.

Research on decision latency shows throughput drops sharply as approvals increase. Constrain mandatory sign-offs to the smallest viable group while offering optional visibility for others. See Nielsen Norman Group on decision making and friction.

7. Next: long-term prevention tactics and how the content lifecycle ties into broader growth

Shift from firefighting to lifecycle management to reduce decay frequency. Maintain a living content roadmap that plans refresh windows when queries, products, or regulations change. Align lifecycle checkpoints with quarterly planning so refreshes and new builds share briefs, research, and measurement frameworks.

Bake quality and intent alignment into creation to slow decay. Use people‑first guidelines that emphasize originality, expertise, and usefulness. Google outlines criteria for helpful, reliable content that consistently earns search demand and links over time. See creating helpful content. Pair these standards with internal linking and schema hygiene so new pages inherit relevance and authority.

Connect lifecycle to growth operations:

- Report recovery and prevention KPIs in the same dashboard as pipeline and revenue

- Allocate a fixed percentage of content capacity to refresh work each quarter

- Tie decay clusters to product marketing and demand gen themes

- Feed search insights to paid media to refine targeting and creative

- Close the loop by sharing wins and misses in monthly business reviews

This integration ensures content decay management compounds into lower CAC, higher LTV, and more resilient organic growth over time, which leads naturally into a scalable operating model for content health.

How content lifecycle management supports sustainable growth

Content decay is widely treated as inevitable because search intent shifts and competitors publish fresher assets. Ahrefs analyzed almost one billion pages and reported that 96.55 percent receive no Google traffic. That figure shows how scarce organic visibility is and how much upkeep it takes to hold. The cadence of core updates and changing SERP features adds complexity, so lifecycle management becomes a planning discipline rather than a one-time fix.

Why continuous content maintenance is part of a scalable acquisition strategy

Content decay compounds over time because links, intent, and SERP layouts change. Older articles slip as competitors add data, examples, or new formats that match searcher expectations. Without structured updates, the same content requires more budget later to regain visibility.

Refreshes extend the half-life of winning pages. Prioritization should use traffic, conversions, and link equity to select candidates. Updates often include revalidating keywords, adding new sections, and improving depth to match current expertise standards.

The Ahrefs finding that the overwhelming majority of pages earn no organic traffic also underscores the need to keep high-potential assets current, especially the ones that already rank.

Animalz popularized the concept of content decay, describing predictable, sigmoid growth followed by a gradual decline that can be countered with scheduled improvements.
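The decay stage can be approximated from daily clicks. Compare the latest 28-day total with the best 28-day total the page has ever recorded. This is a simple heuristic, not the Animalz method. The 28-day window and the 30 percent drop threshold are assumptions to tune per site.

```python
from __future__ import annotations


def rolling_sums(daily: list[float], window: int = 28) -> list[float]:
    """Sum of each consecutive `window`-day span, oldest first."""
    if len(daily) < window:
        return []
    total = sum(daily[:window])
    sums = [total]
    for i in range(window, len(daily)):
        total += daily[i] - daily[i - window]   # slide the window by one day
        sums.append(total)
    return sums


def decay_phase(daily_clicks: list[float], window: int = 28, drop_threshold: float = 0.30) -> str:
    sums = rolling_sums(daily_clicks, window)
    if not sums:
        return "insufficient data"
    peak = max(sums)
    if peak == 0:
        return "no traffic"
    if sums.index(peak) == len(sums) - 1:
        return "growth or peak"   # the best window is the most recent one
    drop = 1 - sums[-1] / peak
    return "decay" if drop >= drop_threshold else "plateau"


print(decay_phase([10] * 56 + [5] * 28))  # clicks halved after eight weeks
```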

Continuous maintenance scales when it runs on a predictable rhythm. Start with a quarterly audit of the top 100 to 500 URLs by sessions and conversions.

- Maintain a prioritized backlog of refresh candidates with expected impact and effort

- Group fixes into monthly sprints that mix quick wins and deeper rewrites

- Track lift using annotated time-series views for clicks, impressions, and conversions

- Align refreshes with seasonality, product launches, or pricing changes

- Build a deprecation path for topics that no longer map to intent

A standing cadence reduces bottlenecks and creates compounding gains across quarters.
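Candidate selection for the quarterly audit can be sketched as the union of the top N URLs by sessions and the top N by conversions. That way, pages that convert well on modest traffic are not skipped. The input shape is an assumed analytics export: dicts with `url`, `sessions`, and `conversions` keys. The union can hold up to 2N URLs.

```python
from __future__ import annotations


def audit_candidates(pages: list[dict], n: int = 100) -> list[str]:
    """URLs in the top n by sessions or the top n by conversions, without duplicates."""
    by_sessions = sorted(pages, key=lambda p: p["sessions"], reverse=True)[:n]
    by_conversions = sorted(pages, key=lambda p: p["conversions"], reverse=True)[:n]
    selected, seen = [], set()
    for page in by_sessions + by_conversions:   # union, preserving rank order
        if page["url"] not in seen:
            seen.add(page["url"])
            selected.append(page["url"])
    return selected


pages = [
    {"url": "/a", "sessions": 1000, "conversions": 1},
    {"url": "/b", "sessions": 500, "conversions": 40},
    {"url": "/c", "sessions": 10, "conversions": 0},
    {"url": "/d", "sessions": 800, "conversions": 2},
]
print(audit_candidates(pages, n=2))
```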

Historical optimization can unlock quick wins in parallel with net-new content. HubSpot reported that updating older posts led to an average 106% increase in traffic per post and substantial lead growth on top pages. Selecting assets with existing links and strong engagement shortens time-to-impact.

Refresh systems also prevent cannibalization. Merge overlapping articles and redirect to a canonical resource to concentrate equity. Measure success by aggregate clicks to the topic cluster, not just the surviving URL.
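Measuring at the cluster level means attributing clicks that used to land on merged URLs to the page that now receives their redirects. The following is a minimal sketch. It assumes a `redirects` map from old URL to new URL and click totals keyed by URL for matching before and after windows. All URLs and numbers are illustrative.

```python
from __future__ import annotations


def resolve(url: str, redirects: dict[str, str]) -> str:
    """Follow redirect chains to the final URL, stopping if a loop is detected."""
    seen = set()
    while url in redirects and url not in seen:
        seen.add(url)
        url = redirects[url]
    return url


def cluster_clicks(clicks_by_url: dict[str, int], redirects: dict[str, str], cluster: set[str]) -> int:
    """Total clicks for URLs that end up, directly or via redirects, inside the cluster."""
    return sum(c for u, c in clicks_by_url.items() if resolve(u, redirects) in cluster)


redirects = {"/old-a": "/guide", "/old-b": "/old-a"}
before = {"/old-a": 300, "/old-b": 200, "/guide": 100, "/other": 999}
after = {"/guide": 750, "/other": 950}
pre = cluster_clicks(before, redirects, {"/guide"})
post = cluster_clicks(after, redirects, {"/guide"})
print(f"cluster clicks {pre} -> {post} ({(post - pre) / pre:+.0%})")
```

Comparing only the surviving `/guide` URL would show 100 to 750 clicks. The cluster view gives the more honest 600 to 750.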

Finally, tie refresh criteria to business value. Require a conversion goal on each refreshed page and align CTAs with current offers. Use cohort analysis to confirm that refreshed posts improve assisted conversions, not just sessions, which links lifecycle hygiene directly to revenue outcomes.

How content health affects funnel performance, CRO, and paid media efficiency

Content quality determines eligibility for high-intent queries. Backlinko’s click-through study found position one captures roughly 27.6 percent CTR, while position ten gets about 2.4 percent, which amplifies small ranking changes into large traffic swings. As decay pushes pages down, pipeline volume suffers even if total impressions stay flat.
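A quick worked example shows the amplification. It uses only the two CTR figures quoted above and a hypothetical page with flat impressions. Expected clicks are approximated as impressions × CTR at the page's position.

```python
# CTR figures quoted above from the Backlinko study; other positions are omitted on purpose.
CTR_BY_POSITION = {1: 0.276, 10: 0.024}


def expected_clicks(impressions: int, position: int) -> float:
    """Approximate clicks as impressions multiplied by the CTR at that position."""
    return impressions * CTR_BY_POSITION[position]


impressions = 10_000                            # hypothetical monthly impressions, held flat
clicks_top = expected_clicks(impressions, 1)      # about 2,760
clicks_tenth = expected_clicks(impressions, 10)   # about 240
loss_share = 1 - clicks_tenth / clicks_top        # about 0.91
print(f"{clicks_top:.0f} -> {clicks_tenth:.0f} clicks ({loss_share:.0%} fewer)")
```

With the same 10,000 impressions, dropping from position one to position ten removes roughly nine in ten clicks.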

Conversion rates degrade when content drifts from user intent or technical quality standards. A Deloitte and Google study found that a 0.1-second improvement in mobile site speed drove an 8.4 percent conversion rate lift for retail and a 10.1 percent lift for travel, showing the CRO cost of slow, bloated pages. Decay introduces outdated claims, slow scripts, and mismatched CTAs that depress lead quality.

Paid media efficiency depends on organic coverage and landing page relevance. Think with Google’s Search Ads Pause analyses reported a high share of incremental clicks from paid search, but incrementality varies with organic rank and brand strength. Strong organic coverage on non-brand terms enables precise bid reductions without sacrificing total clicks.

Map lifecycle work to funnel goals, not just rankings.

- Top-of-funnel: maintain freshness and data accuracy to win featured snippets and newsworthy angles

- Mid-funnel: strengthen comparisons, calculators, and frameworks that shepherd evaluation

- Bottom-of-funnel: align offers, pricing, and proof with current sales narratives

Close the loop by tracking assisted conversions and post-click engagement per funnel stage.

Run content health checks before scaling ad spend to avoid paying for friction.

- Validate message match and query to landing alignment for each ad group

- Confirm Core Web Vitals, especially LCP and INP, meet thresholds on mobile

- Remove redundant pages to consolidate Quality Score signals

- Use negative keywords where organic ranks consistently drive qualified traffic

Even small landing-page fixes can reduce CPA while maintaining lead quality.
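The Core Web Vitals check can be scripted against field data. The sketch uses the published "good" and "poor" boundaries, assessed at the 75th percentile: LCP 2.5 s and 4 s, INP 200 ms and 500 ms, and CLS 0.1 and 0.25. Where the p75 values come from, such as CrUX or your own RUM, is left open. `ready_for_spend` is a hypothetical gate.

```python
from __future__ import annotations

# metric -> (upper bound for "good", upper bound for "needs improvement"), at p75
THRESHOLDS = {
    "LCP": (2500, 4000),  # milliseconds
    "INP": (200, 500),    # milliseconds
    "CLS": (0.1, 0.25),   # unitless layout-shift score
}


def rate(metric: str, p75: float) -> str:
    good_max, needs_improvement_max = THRESHOLDS[metric]
    if p75 <= good_max:
        return "good"
    if p75 <= needs_improvement_max:
        return "needs improvement"
    return "poor"


def ready_for_spend(p75_by_metric: dict[str, float]) -> bool:
    """Hypothetical gate: every Core Web Vital must be 'good' before scaling ads."""
    return all(rate(metric, value) == "good" for metric, value in p75_by_metric.items())


mobile_p75 = {"LCP": 2300, "INP": 350, "CLS": 0.05}   # example field data
print({m: rate(m, v) for m, v in mobile_p75.items()}, ready_for_spend(mobile_p75))
```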

Monitor decayed assets for spillover effects. As authority erodes on a hub page, internal links pass less value to dependent assets. Conversely, a refreshed hub plus improved nav can lift clusters through stronger relevance and engagement signals, which shows how lifecycle work supports channel efficiency.

Roles and team models: in-house owners, specialist contractors, and agency support models with pros/cons

An in-house model centralizes institutional knowledge and cross-functional alignment. It excels at incorporating product updates, roadmaps, and compliance into content quickly. The tradeoff is limited bandwidth for deep technical audits or large refresh cycles during peak periods.

Specialist contractors are well-suited to episodic projects such as technical cleanups, large-scale rewrites, or schema overhauls. They bring niche expertise and can move fast on defined scopes. The challenge is maintaining continuity, handling handoffs, and ensuring standards across different writers and editors.

Hybrid models often pair in-house strategy with external production or technical support. The in-house team owns priorities and acceptance criteria. External specialists deliver execution at scale. The key risk is governance. Without clear definitions of done, quality drifts and decay returns.

Match the model to the operating constraints and governance maturity.

- In-house only: control and speed for product updates, limited surge capacity

- Contractor-led: elastic capacity and expertise, higher coordination overhead

- Hybrid: balanced control with scale, requires strong playbooks and QA

Transition plans should include knowledge transfer, asset inventories, and shared dashboards.

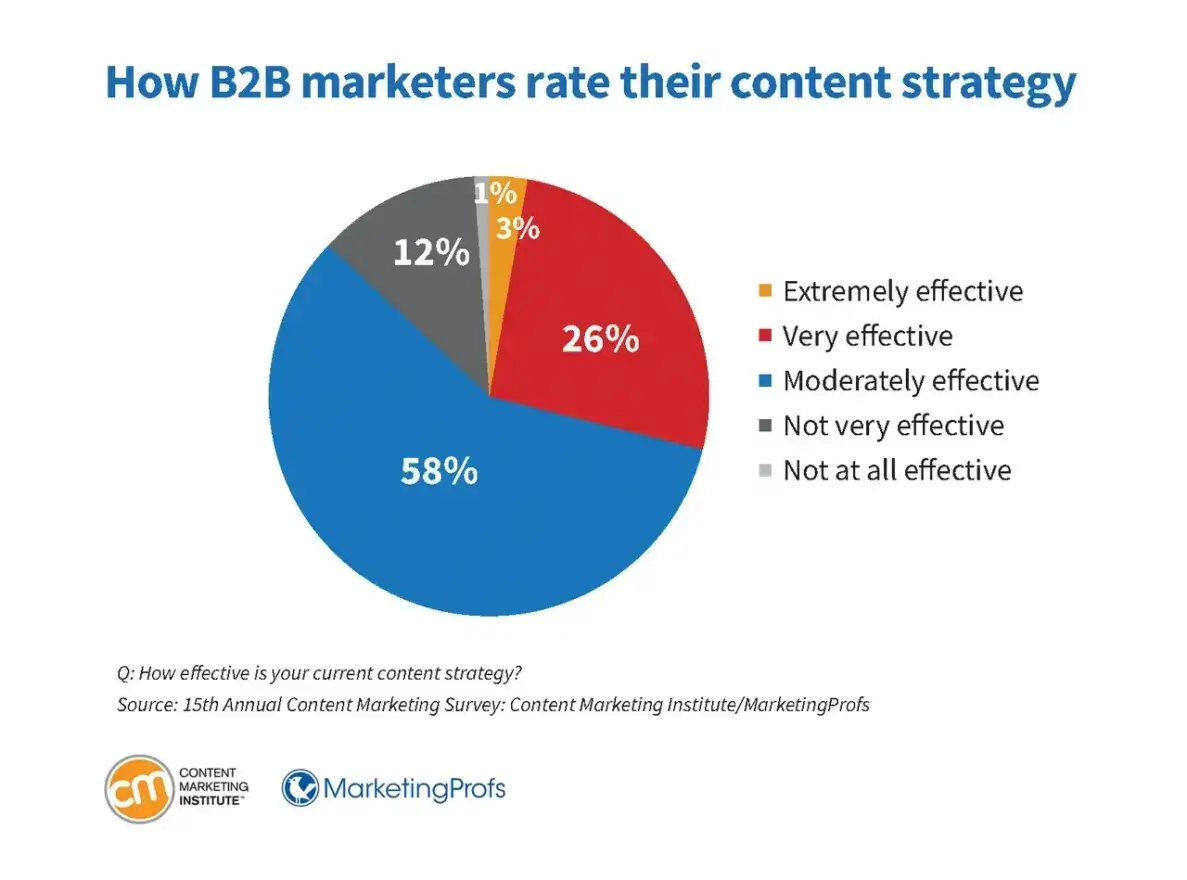

Documented processes correlate with better outcomes. The Content Marketing Institute’s B2B research reports that marketers with a documented strategy are far more likely to report success than those without a documented plan. Pair the documented strategy with a living style guide and an acceptance checklist to standardize refresh quality.

Define roles explicitly to maintain a predictable cadence. Use RACI assignments for auditing, prioritization, drafting, review, and measurement. Align editors and subject-matter experts with fixed SLAs to avoid content sitting in review queues, which maintains momentum as libraries grow.
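RACI assignments are easy to get subtly wrong, for example by giving review two accountable owners. A small validator can flag the usual rule breaks: each activity needs exactly one Accountable and at least one Responsible. It assumes a hypothetical `{activity: {person: role}}` matrix.

```python
from __future__ import annotations

ACTIVITIES = ["auditing", "prioritization", "drafting", "review", "measurement"]


def raci_problems(matrix: dict[str, dict[str, str]]) -> list[str]:
    """matrix maps activity -> {person: role}, with role one of 'R', 'A', 'C', 'I'."""
    problems = []
    for activity in ACTIVITIES:
        roles = list(matrix.get(activity, {}).values())
        accountable = roles.count("A")
        if accountable != 1:
            problems.append(f"{activity}: needs exactly one Accountable, found {accountable}")
        if "R" not in roles:
            problems.append(f"{activity}: no Responsible owner")
    return problems


matrix = {a: {"seo_lead": "A", "editor": "R", "sme": "C"} for a in ACTIVITIES}
matrix["review"] = {"editor": "A", "sme": "A"}   # two owners and nobody doing the work
print(raci_problems(matrix))
```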

Tooling and repeatable processes agencies use to maintain content libraries at scale

Mature lifecycle teams rely on telemetry. Google Search Console provides query-level loss detection at scale, while analytics pinpoints conversion drop-offs by page and segment. Crawlers surface thin content, broken links, and templated issues that compound decay.

A refresh pipeline benefits from automation. Use scheduled crawls, export GSC deltas, and synthesize dashboards that rank decay by business impact. Create templates for content briefs that include target intent, updated competitors, and structured data requirements.
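The step that ranks GSC deltas by business impact might look like the sketch below. It assumes click totals per URL for two equal-length windows, exported from the Performance report or the Search Analytics API. It also assumes a hypothetical per-URL `value_per_click` estimate. The 30 percent floor is an assumption.

```python
from __future__ import annotations


def decay_report(current: dict[str, int], previous: dict[str, int],
                 value_per_click: dict[str, float], min_drop: float = 0.30) -> list[tuple]:
    """Rank URLs whose clicks fell by at least `min_drop`, largest estimated value lost first."""
    rows = []
    for url, before in previous.items():
        after = current.get(url, 0)          # a URL missing from the new export counts as zero
        if before <= 0:
            continue
        drop = (before - after) / before
        if drop >= min_drop:
            lost_value = (before - after) * value_per_click.get(url, 0.0)
            rows.append((url, round(drop, 3), lost_value))
    return sorted(rows, key=lambda row: row[2], reverse=True)


previous = {"/a": 1000, "/b": 200, "/c": 500}     # prior 28 days
current = {"/a": 600, "/b": 100, "/c": 480}       # latest 28 days
value = {"/a": 0.5, "/b": 5.0}                    # hypothetical value per click
for url, drop, lost in decay_report(current, previous, value):
    print(f"{url}: clicks -{drop:.0%}, est. value lost {lost:.0f}")
```

In this example, `/b` outranks `/a` despite losing fewer clicks, because each of its clicks is worth more.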

Process discipline multiplies tool value. A weekly triage forces decisions on deprecation, consolidation, or refreshment. A monthly sprint turns those decisions into shipped changes. A quarterly review recalibrates the model using new benchmarks and updated taxonomy.

A standard toolkit covers discovery, diagnosis, and delivery.

- Discovery: GSC, query mining, and SERP feature tracking for loss detection

- Diagnosis: crawlers for internal links and status codes, Core Web Vitals monitoring, and schema validators

- Delivery: content briefs, checklists, and publishing QA with pre- and post-metrics

Add log analysis where appropriate to verify crawl parity and recrawl velocity.

GSC documentation explains how to use reports such as Performance and Page Indexing to identify loss patterns, soft 404s, and indexing gaps that mimic decay. Exporting GSC data over time enables cohort analysis of refreshed pages. The URL Inspection API can verify whether fixes are recognized at the URL level before and after shipping.
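For the before-and-after check, the URL Inspection API returns an index status per URL. The sketch below only parses a response that has already been fetched. It assumes the documented `inspectionResult.indexStatusResult` fields `verdict` and `lastCrawlTime`, so confirm these names against the current API reference before relying on it. Fetching is out of scope here. With google-api-python-client, it is typically an `urlInspection().index().inspect(...)` call on the Search Console v1 service.

```python
from __future__ import annotations

from datetime import datetime, timezone


def _index_status(response: dict) -> dict:
    # Assumed response shape: {"inspectionResult": {"indexStatusResult": {...}}}
    return response.get("inspectionResult", {}).get("indexStatusResult", {})


def recrawled_since(response: dict, shipped_at: datetime) -> bool:
    """True if Google's last crawl of the URL happened after the fix shipped."""
    ts = _index_status(response).get("lastCrawlTime")
    if not ts:
        return False
    crawled = datetime.fromisoformat(ts.replace("Z", "+00:00"))  # handle RFC 3339 'Z'
    return crawled > shipped_at


def fix_recognized(response: dict, shipped_at: datetime) -> bool:
    """Recrawled after shipping and currently passing the index-status check."""
    return recrawled_since(response, shipped_at) and _index_status(response).get("verdict") == "PASS"


sample = {"inspectionResult": {"indexStatusResult": {
    "verdict": "PASS", "lastCrawlTime": "2024-05-03T08:00:00Z"}}}
print(fix_recognized(sample, datetime(2024, 5, 1, tzinfo=timezone.utc)))
```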

Content briefs should encode structure that resists future decay. Capture the canonical angle, updated statistics, expert quotes, and link targets for hubs and spokes. Require schema, media optimization, and accessibility checks to protect technical quality.

Governance patterns that reduce future decay: taxonomy, editorial calendars, and internal linking standards

Governance reduces entropy in large libraries. A clean taxonomy clarifies canonical coverage, prevents duplicates, and supports internal routing. Editorial calendars enforce regular refreshes and stage coverage for launches and seasonality.

Internal linking standards are a force multiplier. A Zyppy study analyzing tens of millions of internal links found strong correlations between descriptive anchor text, link count, and higher rankings. Standards should define anchors, hub-and-spoke patterns, and the maximum click depth per cluster.

The Content Marketing Institute findings on documented strategy apply to governance too. Style guides and acceptance checklists reduce variability in updates and support consistent E-E-A-T signals.

Codify governance into a short, enforced rule set.

- Taxonomy: one canonical page per intent with documented clusters

- Editorial calendar: quarterly refresh cycles with acceptance criteria and owners

- Internal linking: anchors that mirror intent, capped click depth, periodic audits

Measure adherence with automated crawls and spot checks.
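Click depth is straightforward to audit from a crawl export with a breadth-first search from the homepage or hub. In this sketch, `links` is an assumed adjacency map of internal links, and the three-click cap is an assumption. Pages that cannot be reached at all count as violations.

```python
from __future__ import annotations

from collections import deque


def click_depths(links: dict[str, list[str]], start: str) -> dict[str, int]:
    """Fewest clicks from `start` to every reachable page (breadth-first search)."""
    depth = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:          # first visit is the shortest path in BFS
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def depth_violations(links: dict[str, list[str]], start: str,
                     cluster_pages: set[str], max_depth: int = 3) -> list[str]:
    depth = click_depths(links, start)
    return sorted(p for p in cluster_pages if depth.get(p, float("inf")) > max_depth)


links = {"/": ["/hub"], "/hub": ["/a", "/b"], "/a": ["/a1"], "/a1": ["/a2"]}
print(depth_violations(links, "/", {"/a", "/b", "/a2", "/orphan"}))
```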

Standards benefit from concrete examples and tools. Create a pattern library with model pages for each format, such as guides, comparisons, case studies, and FAQs. Maintain a schema library for article, product, how-to, and FAQ types.